Struggling to figure out if AI detectors can catch reused drafts? These tools claim to spot repetitive or AI-generated content, but they’re not perfect. This post explains how these detectors work, where they fall short, and what you need to know.

Keep reading to find out the real deal!

Key Takeaways

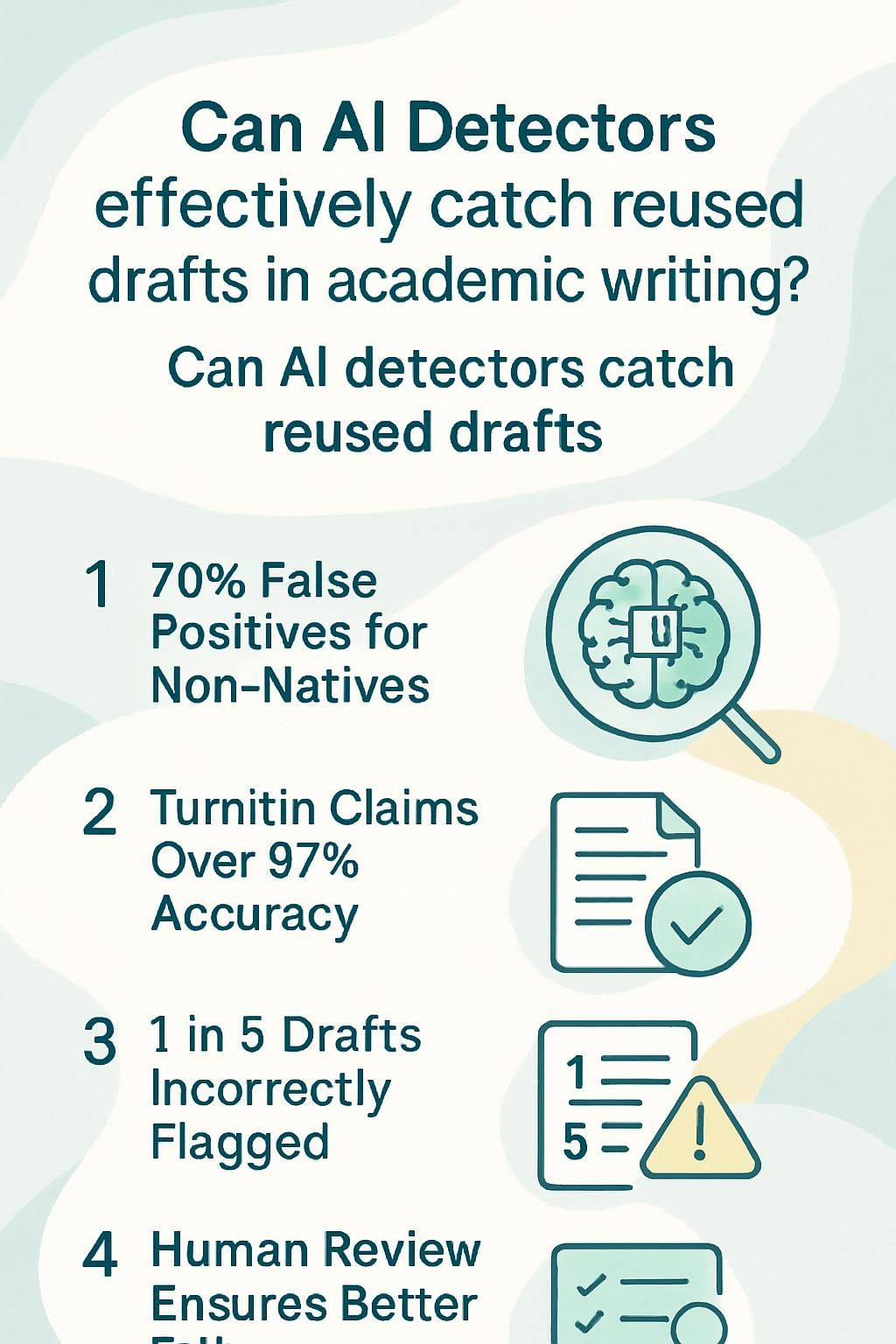

- AI detectors check patterns and probabilities to flag reused or AI-generated content, but they often miss subtle rewrites and edits.

- False positives are common: work by non-native English speakers is wrongly flagged up to 70% of the time, and even famous texts like the US Constitution have been misidentified as AI-generated.

- Leading tools like Turnitin claim over 97% accuracy but still face issues with false positives and overlooked reused drafts.

- Real-world tests show detection tools struggle, with one in five documents wrongly flagged; human review is needed for fairness.

- Best practices include combining AI analysis with human judgment, educating students on detectors, and avoiding complete reliance on these tools alone.

How AI Detectors Work

AI detectors check patterns in text. They use machine learning to spot signs of artificial writing.

Algorithms and language patterns

Algorithms in AI detectors look for patterns. They compare your text against databases and try to spot repeated phrases or structures. For example, a reused draft might follow a flow or style similar to an older one.

These tools rely on natural language processing (NLP) to study grammar, sentence length, and word choice.

‘AI-written text often overuses complex words,’ say experts.

With this method, a detector can pick up repeated ideas or unnatural phrasing. It estimates how likely certain words are to appear together and flags combinations that show up more often than expected. That can highlight passages where old content has been reused without real changes, which is where concerns about academic dishonesty come in.
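To make the "repeated phrases" idea concrete, here is a minimal sketch that compares two drafts by the three-word sequences they share. The function names `shingles` and `overlap_score` are made up for illustration, and real detectors are far more elaborate; this only shows the general shape of a phrase-overlap check.

```python
import re

def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of word n-grams (shingles) in the text, lowercased."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def overlap_score(new_draft: str, old_draft: str, n: int = 3) -> float:
    """Jaccard similarity of the two drafts' shingle sets, from 0.0 to 1.0."""
    a, b = shingles(new_draft, n), shingles(old_draft, n)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)

# Illustrative sentences, not taken from any real dataset.
old = "The industrial revolution changed how people lived and worked in cities."
reused = "The industrial revolution changed how people lived and worked in towns."
fresh = "Medieval guilds controlled who could practise a trade within city walls."

print(round(overlap_score(reused, old), 2))  # 0.8
print(round(overlap_score(fresh, old), 2))   # 0.0
```

A score near 1.0 suggests the new draft repeats long stretches of the old one. Where to draw the line between "reused" and "similar topic" is a judgment call that this sketch does not settle.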

Probability-based detection

AI detection software often uses probability models to spot AI-generated content. These tools analyze word choices, sentence structure, and patterns in writing. If the text fits the style of a known AI system, such as the output of OpenAI’s chatbot, it raises flags.

False positives happen with complex or formal texts. For example, the US Constitution has been flagged by these systems. Non-native English writers face higher risks too. Up to 70% of their work can wrongly trigger detection due to differing language patterns.

This flaw points to bias in current detection technology, and it matters most when the tools are used to judge academic writing.
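One common way to frame probability-based detection is "how predictable is this text to a language model?" The sketch below uses a toy bigram model with add-one smoothing and reports perplexity, where lower means more predictable. The `BigramModel` class and the tiny reference corpus are assumptions for illustration only; commercial detectors rely on much larger models and do not publish their exact methods.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())

class BigramModel:
    """Toy bigram language model with add-one (Laplace) smoothing."""

    def __init__(self, corpus: str) -> None:
        tokens = tokenize(corpus)
        self.unigrams = Counter(tokens)
        self.bigrams = Counter(zip(tokens, tokens[1:]))
        self.vocab_size = len(self.unigrams) + 1  # one extra slot for unseen words

    def perplexity(self, text: str) -> float:
        """Lower perplexity means the text is more predictable to this model."""
        tokens = tokenize(text)
        pairs = list(zip(tokens, tokens[1:]))
        if not pairs:
            raise ValueError("need at least two words")
        log_prob = 0.0
        for prev, word in pairs:
            count = self.bigrams[(prev, word)] + 1
            total = self.unigrams[prev] + self.vocab_size
            log_prob += math.log(count / total)
        return math.exp(-log_prob / len(pairs))

# Hypothetical reference text standing in for "what the model has seen".
model = BigramModel("the cat sat on the mat the dog sat on the rug")

predictable = model.perplexity("the cat sat on the rug")
surprising = model.perplexity("rug the on dog mat cat")
print(predictable < surprising)  # True
```

This also hints at why formal or formulaic human writing can be flagged: if a text happens to be very predictable to the model, a perplexity-style score cannot tell whether a person or a machine produced it.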

Can AI Detectors Identify Reused Drafts?

AI detectors often struggle with reused drafts because they focus on patterns, not intent. Small edits or rephrased sentences can slip past detection tools easily.

Challenges with detecting reused content

Detecting reused drafts can be tricky. AI detection software often struggles with subtle rewrites or paraphrasing of previous work. Many tools rely on patterns or probabilities, which fail to catch clever edits made by humans.

A student could change sentences slightly, and the system might miss these changes completely.
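Here is a small sketch of why a one-word edit can defeat strict matching. It compares an exact fingerprint (a hash of the sentence) with a fuzzy similarity score from Python's standard `difflib` module. The helper names `fingerprint` and `fuzzy_ratio` and the 0.8 cutoff are illustrative assumptions, not how any particular detector works.

```python
import hashlib
from difflib import SequenceMatcher

def fingerprint(sentence: str) -> str:
    """Hash of the normalised sentence; changing any word changes the hash."""
    normalised = " ".join(sentence.lower().split())
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()

def fuzzy_ratio(a: str, b: str) -> float:
    """Character-level similarity from difflib, between 0.0 and 1.0."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

original = "The experiment showed that plants grow faster under blue light."
edited = "The experiment showed that plants grow quicker under blue light."

exact_match = fingerprint(original) == fingerprint(edited)
similar = fuzzy_ratio(original, edited) > 0.8  # 0.8 is an arbitrary cutoff

print(exact_match)  # False
print(similar)      # True
```

Exact matching misses the edited sentence entirely, while fuzzy matching still sees it as close. Heavier paraphrasing pushes the fuzzy score down too, which is the gap the paragraph above describes.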

False positives also create problems. Misidentification rates can hit 20%, flagging honest work as reused content unfairly. This damages trust in artificial intelligence and raises questions about its reliability in academic settings.

“No tool is perfect,” said one researcher in early 2023, emphasizing the need for human judgment alongside AI-powered systems.

Limitations of current AI detection tools

AI detection software struggles with false positives. Non-native English speakers are hit hardest; their work is wrongly flagged up to 70% of the time. Even famous texts like the US Constitution and parts of the Bible have been mistakenly labeled as AI-generated content.

These detectors also fail at catching subtle rewrites or edits in reused drafts. They rely on patterns and probabilities, so small changes can trick them easily. In practice, accuracy tops out at around 80%, leaving plenty of room for errors in academic writing cases.

Reliability of AI Detectors

AI detectors promise accuracy, but their results can be hit or miss. They often struggle to separate reused drafts from simple writing habits.

Claims made by leading AI detectors

AI detection tools claim high accuracy and reliability. They often promise to catch reused drafts quickly and efficiently.

- Turnitin, a popular tool available on platforms like Canvas, claims to detect AI-generated content with over 97% accuracy. It flags patterns or styles that resemble AI writing tools.

- Companies behind these detectors assert they can spot both reused content and brand-new AI-written text by analyzing unique language markers and probability models.

- Many detection programs highlight their ability to reduce academic dishonesty by tracking repeated phrases or unusual structuring in writing.

- False positives remain a concern despite these claims, as even creative human writing sometimes triggers alerts in AI detectors.

- Some businesses state their software evolves rapidly to keep up with new trends in AI-generated text, though no tool is perfect yet.

- Developers argue that combining AI analysis with human intelligence improves academic integrity while ensuring fairness in reviews.

Accuracy vs. reality in reused draft detection

Claims about AI content detectors often paint a rosy picture, but the reality hits differently. Vendors advertise accuracy above 97%, yet real-world tests put it closer to 80%. In other words, roughly one in five documents gets misidentified.

This means students or writers using legitimate sources could face false positives unfairly. On the flip side, reused drafts might go unnoticed due to false negatives.
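A quick back-of-the-envelope calculation shows what a one-in-five error rate can mean in a classroom. The sketch below assumes 1,000 submissions, that 10% of them really are reused, and that the detector is 80% accurate on both reused and original work. The 10% share and the equal-accuracy assumption are invented for illustration; they do not come from any study.

```python
def flag_breakdown(total: int, share_reused: float, accuracy: float) -> dict[str, float]:
    """Split documents into outcomes, assuming the detector is equally
    accurate on reused and original work (a simplifying assumption)."""
    reused = total * share_reused
    original = total - reused
    caught = reused * accuracy            # true positives
    missed = reused - caught              # false negatives
    wrongly_flagged = original - original * accuracy  # false positives
    return {
        "caught_reused": caught,
        "missed_reused": missed,
        "wrongly_flagged": wrongly_flagged,
        "share_of_flags_wrong": wrongly_flagged / (wrongly_flagged + caught),
    }

result = flag_breakdown(total=1000, share_reused=0.10, accuracy=0.80)
for name, value in result.items():
    print(name, round(value, 2))
# caught_reused 80.0
# missed_reused 20.0
# wrongly_flagged 180.0
# share_of_flags_wrong 0.69
```

Under these assumptions, more than two thirds of all flags land on honest work, simply because honest writers far outnumber those reusing drafts. Different assumptions give different numbers, but the pattern is why a flag alone is weak evidence.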

Top universities like Vanderbilt have already dropped certain detection software after issues with reliability surfaced. An over-reliance on algorithms alone creates blind spots in academic integrity checks.

Machines struggle to separate reused ideas from natural writing patterns, leaving gaps that human review must fill.

Best Practices for Using AI Detectors

Pair AI detectors with your own judgment to get better results. Don’t lean entirely on software—it’s just a tool, not a magic wand.

Combining AI tools with human review

AI detection software can be a useful starting point, but it has flaws. Combining it with human review strengthens its reliability and fairness.

- Compare the flagged text to a student’s previous work. Check style, tone, and complexity. This helps spot inconsistencies that AI might miss.

- Use AI as an initial filter only. Do not solely rely on its results for academic dishonesty cases.

- Bring in experts or teachers to evaluate flagged drafts. Human judgment adds context that machines lack.

- Keep clear records during investigations. Document findings from both AI tools and human reviewers for transparency.

- Educate students about how AI detectors look for reused drafts. This builds awareness and deters misuse, and it helps honest writers worry less about false positives.

- Look out for both false positives and false negatives in flagged content. Balance technology with critical thinking to improve accuracy.

- Run flagged work through more than one AI detection tool rather than relying on a single detector. Comparing results gives better insight into potential issues in the writing process.
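The first tip above, comparing flagged text with a student's earlier work, can be roughed out in a few lines. This sketch computes three simple style features and the relative gap between two samples. The feature set, the function names `style_profile` and `profile_gap`, and the sample texts are all assumptions chosen for illustration; a large gap is a prompt for a conversation, not proof of anything.

```python
import re

def style_profile(text: str) -> dict[str, float]:
    """Compute a few crude style features for a piece of writing."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text.lower())
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence")
    return {
        "avg_sentence_length": len(words) / len(sentences),
        "avg_word_length": sum(len(w) for w in words) / len(words),
        "vocabulary_richness": len(set(words)) / len(words),
    }

def profile_gap(a: dict[str, float], b: dict[str, float]) -> dict[str, float]:
    """Relative difference per feature, from 0.0 (same) towards 1.0 (very different)."""
    return {key: abs(a[key] - b[key]) / max(a[key], b[key]) for key in a}

# Made-up samples: an earlier assignment and a newly flagged passage.
previous = "I like dogs. They are fun. We play a lot."
flagged = ("Canine companionship demonstrably enhances psychological "
           "wellbeing across numerous demographic populations.")

gap = profile_gap(style_profile(previous), style_profile(flagged))
for feature, value in gap.items():
    print(feature, round(value, 2))
```

Short samples make these numbers jumpy, so a reviewer would want several paragraphs from each source before reading much into the gap.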

Avoiding over-reliance on detection software

Leaning too much on AI detection tools can backfire. These tools often make mistakes, like false positives or false negatives, because reused drafts may not trigger the same flags as completely AI-generated content.

As of Spring 2023, many detection programs fail to live up to their accuracy claims.

Pair software with human judgment for better results. Teachers or reviewers should also look closely at students’ writing style and sources. Relying only on technology risks missing cases of academic dishonesty or wrongly accusing honest writers.

How to Avoid Being Flagged by AI Detectors in College

AI detectors are getting smarter, but they still have flaws. Students can avoid being flagged by following some simple steps.

- Write drafts in stages. Break your writing into parts and save each version. Showing a history of edits supports originality.

- Avoid copying text directly from AI tools. Paraphrase or add personal touches to stand out from AI-generated content.

- Use varied sentence structures. Repeated patterns might look suspicious, so mix short sentences with longer ones.

- Add unique details to your work. Include personal experiences or specific examples to make your writing less generic.

- Review the assignment instructions carefully. Understand the point of the task to avoid shortcuts that risk academic dishonesty claims.

- Check for “Trojan Horse” words hidden by instructors. Some teachers may insert uncommon phrases as traps for detecting AI use.

- Read through your final draft slowly before submission. Look out for awkward phrases that often show up in AI-generated text.

- Use detection software on yourself first, like Turnitin or Grammarly’s AI detection tools, to see how you score before submitting.

- Avoid over-relying on spell checkers or grammar tools alone to fix problems; these may create robotic-sounding sentences that trigger detectors.

- Keep track of all sources if using research material; citing them properly reduces chances of being accused of dishonesty.
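For the tip about varied sentence structures, a rough self-check is to measure how much your sentence lengths vary. The sketch below reports the coefficient of variation (standard deviation divided by mean) of words per sentence. The function names are illustrative, and no detector is known to use exactly this number; treat it as a writing aid rather than a way to predict a detector's verdict.

```python
import re
import statistics

def sentence_lengths(text: str) -> list[int]:
    """Word count of each sentence, splitting on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def length_variation(text: str) -> float:
    """Coefficient of variation of sentence length; 0.0 means every sentence is the same length."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = ("It rained. Nobody expected the storm to last through "
          "the entire weekend. We stayed in.")

print(sentence_lengths(uniform))  # [4, 4, 4]
print(sentence_lengths(varied))   # [2, 10, 3]
print(length_variation(uniform) < length_variation(varied))  # True
```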

Conclusion

AI detection tools aren’t perfect. They struggle with reused drafts, often missing subtle patterns or flagging honest work unfairly. Pairing these tools with human oversight makes a big difference.

Teachers should compare writing styles and ask direct questions about the draft. Technology helps, but it still needs a human touch to catch everything.