AI detectors are everywhere now, but how accurate are they? Many tools claim high accuracy, yet there’s no standard for measuring them all fairly. This post answers the question “Are there industry standards for AI detector accuracy?” and walks you through the key facts and issues.

Keep reading to learn what matters most!

Key Takeaways

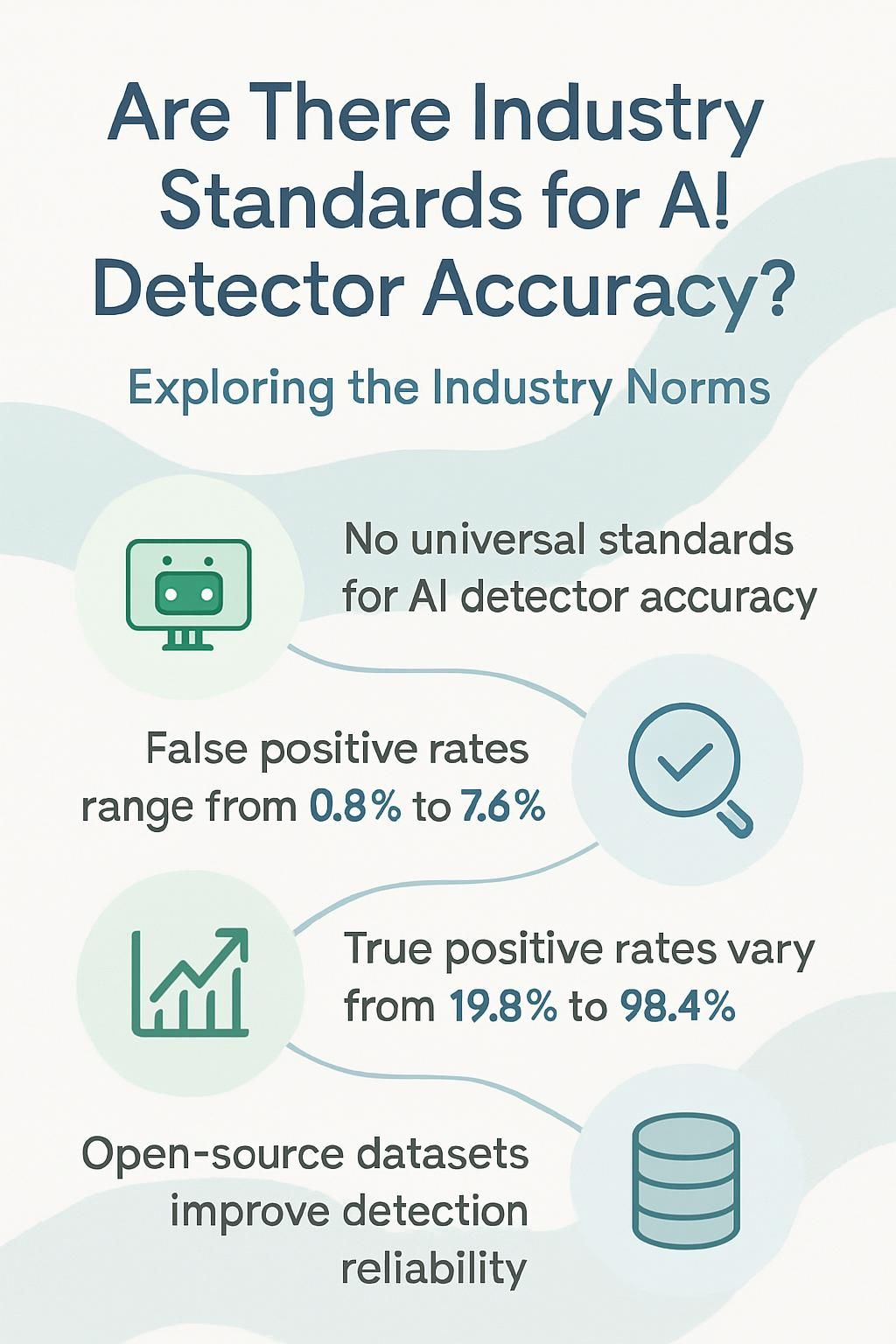

- There are no universal standards for AI detector accuracy. Tools like Originality.ai claim high accuracy, but results vary widely without agreed benchmarks.

- False positives and false negatives create trust issues. False positives unfairly flag human work, while false negatives let AI-generated content slip through. In one set of tests, false positive rates ranged from 0.8% to 7.6%.

- Metrics like True Positive Rate (TPR) and F1 Score help measure detector performance. In one test series, Originality.ai’s TPR ranged from 19.8% to 98.4% depending on conditions.

- Open-source datasets improve testing with real-world examples, such as academic papers, and with tricky rephrased text that helps sharpen tools’ reliability.

- Detecting quotes in AI-written text remains challenging, leading to misclassifications that harm fairness in areas like academic honesty and intellectual property protection.

Current State of AI Detector Accuracy

AI detector accuracy is all over the map. Some tools seem sharp, while others miss the mark entirely.

Lack of universal benchmarks

Universal benchmarks for AI detection accuracy do not exist. Different tools claim varying levels of precision, but no consistent standards back these numbers. For instance, Originality.ai reported the highest F1 score in specific tests, while others scored as low as 32.9%.

The Federal Trade Commission (FTC) has even criticized companies making unsupported claims of over 99% accuracy.

Without agreed-upon metrics such as true positive and false negative rates, comparing tools becomes tricky. It’s a Wild West where each tool sets its own bar. This lack of uniformity leaves users unsure which AI content detectors to trust for tasks like identifying plagiarism or checking academic honesty.

Variability in reported accuracy rates

Reported accuracy differs widely between tools, and even within a single tool. For example, Originality.ai’s Version 3.0 Turbo claims accuracy ranging from 90.2% to 98.8% depending on the test. A spread that wide raises questions about how reliably a tool performs across different conditions.

Some tools might exaggerate performance or fail in real-world scenarios.

Misclassifications often add confusion. A professor failing an entire class over false positive results is one such case. These errors spark debates on the trustworthiness of AI-generated content detectors and their impact on academic integrity or legal disputes.

Key Metrics to Evaluate AI Detector Accuracy

Accuracy isn’t just about catching AI-generated text—it’s also about sparing human-made content from false labels. Understanding these numbers clears the fog and shows how reliable a tool really is.

True Positive Rate (AI text detection)

True Positive Rate (TPR) shows how well AI detectors catch AI-generated content. It’s the ratio of correctly identified AI text to all actual AI-written text: TP / (TP + FN). For instance, if a detector flags 80 out of 100 AI texts but misses 20, the TPR is 80%.

This metric helps measure an AI tool’s sensitivity.
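The TPR formula is easy to check by hand. Here is a minimal Python sketch using the worked example above; the function name is illustrative and not part of any detector’s API.

```python
def true_positive_rate(tp: int, fn: int) -> float:
    """TPR (sensitivity, recall): share of AI-written texts the detector flagged."""
    total_ai = tp + fn  # every text that really was AI-written
    if total_ai == 0:
        raise ValueError("need at least one AI-written sample")
    return tp / total_ai

# Worked example from the text: 80 of 100 AI texts flagged, 20 missed.
print(true_positive_rate(tp=80, fn=20))  # 0.8
```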

Testing results vary widely. Originality.ai achieved TPRs between 19.8% and 98.4% in Test #2 evaluations. A high TPR means a detector catches more of the text produced by large language models, such as GPT-2 or OpenAI-powered systems, whether that text turns up in academic writing or in other settings.

True Negative Rate (human text detection)

Correctly recognizing human-written content matters just as much as catching AI text. The True Negative Rate (TNR) measures this, using the formula TN / (TN + FP). Here, “TN” (true negatives) counts human-written texts correctly classified as human, and “FP” (false positives) counts human-written texts wrongly flagged as AI-generated. The false positive rate is simply 1 − TNR.
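A matching sketch for TNR, with the false positive rate derived from it. The counts are hypothetical: 1,000 human-written texts, 28 of them wrongly flagged, which works out to a 2.8% false positive rate.

```python
def true_negative_rate(tn: int, fp: int) -> float:
    """TNR (specificity): share of human-written texts correctly labeled human."""
    total_human = tn + fp  # every text that really was human-written
    if total_human == 0:
        raise ValueError("need at least one human-written sample")
    return tn / total_human

# Hypothetical test set: 1,000 human texts, 28 wrongly flagged as AI.
tnr = true_negative_rate(tn=972, fp=28)
print(f"TNR = {tnr:.3f}, false positive rate = {1 - tnr:.3f}")
```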

Tools like Originality.ai have made strides in reducing errors. In its Version 3.0 Turbo update, the false positive rate dropped to 2.8%. This shows progress in reducing the misclassification of authentic writing, which matters in areas like academic honesty and intellectual property protection.

“Accurately detecting authenticity fosters trust across industries.”

F1 Score and its significance

The True Negative Rate covers human text, but the F1 Score balances precision and recall. It combines the True Positive Rate (TPR, or recall) with the positive predictive value (PPV, or precision), which is TP / (TP + FP). The formula is the harmonic mean of the two: 2 × (PPV × TPR) / (PPV + TPR).

This single number helps summarize how well a detector performs on test sets that mix AI-generated and human-written text.

In Test #2, Originality.ai achieved the highest F1 score, while Winston.AI scored 52.0. A higher F1 score means a detector both catches more AI-generated text (higher recall) and makes fewer false accusations relative to its correct flags (higher precision).
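To see how the F1 formula behaves, here is a minimal Python sketch that computes PPV and TPR from raw counts and combines them. The counts are made up for illustration. Note that F1 never uses true negatives, which is why it is usually reported alongside TNR or the false positive rate.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2 * (PPV * TPR) / (PPV + TPR), the harmonic mean of precision and recall."""
    if tp == 0:
        return 0.0  # no correct flags: precision and recall are both zero (or undefined)
    ppv = tp / (tp + fp)  # positive predictive value (precision)
    tpr = tp / (tp + fn)  # true positive rate (recall)
    return 2 * (ppv * tpr) / (ppv + tpr)

# Hypothetical counts: 80 AI texts caught, 20 missed, 10 human texts wrongly flagged.
print(round(f1_score(tp=80, fp=10, fn=20), 3))  # 0.842
```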

Industry Standards for AI Detector Accuracy

AI detection tools lack agreed-upon rules for accuracy, so comparing their performance is like comparing apples to oranges.

Existing frameworks and their limitations

Current frameworks for AI detection rely on three main approaches: feature-based, zero-shot, and fine-tuning. Feature-based methods focus on analyzing patterns in syntax or grammar but often miss lightly AI-edited content.

Zero-shot models attempt detection without specific training, yet they frequently produce false positives. Fine-tuning adjusts models using labeled examples, though it struggles with diverse human writing styles.

Many tools lack consistency due to varying training data and benchmarks. Some detectors fail at distinguishing between human-generated content and generative AI outputs like those from large language models (LLMs).

False negatives create gaps by letting some AI-written text slip through undetected, while high false positive rates unfairly flag genuine human work.

Need for standardized testing protocols

AI detector accuracy currently lacks clear, universal benchmarks. Some tools claim over 99% accuracy, but the FTC has warned against such unsupported statements. Without reliable testing protocols, results often vary wildly between AI detection tools, creating confusion for users.

Standardized testing would make it possible to compare true positive rates and false negative rates across platforms such as Originality.ai and other machine learning-based detectors. It would also expose errors like mislabeling human-generated content as AI, giving developers a clear target for reducing them.
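At its core, a standardized protocol means running every tool on the same labeled samples and reporting the same metrics. The sketch below assumes each detector is wrapped as a hypothetical Python function that returns True when it judges a text AI-generated; the two toy detectors and four samples are placeholders, not real tools or benchmark data.

```python
from typing import Callable, Dict, List, Tuple

Sample = Tuple[str, bool]         # (text, is_ai) from a shared labeled dataset
Detector = Callable[[str], bool]  # hypothetical wrapper: True means "judged AI-generated"

def evaluate(detector: Detector, samples: List[Sample]) -> Dict[str, float]:
    """Run one detector over the labeled samples and report standard rates."""
    tp = fn = fp = tn = 0
    for text, is_ai in samples:
        flagged = detector(text)
        if is_ai and flagged:
            tp += 1
        elif is_ai:
            fn += 1
        elif flagged:
            fp += 1
        else:
            tn += 1
    n_ai, n_human = tp + fn, fp + tn
    return {
        "TPR": tp / n_ai if n_ai else float("nan"),
        "FNR": fn / n_ai if n_ai else float("nan"),
        "FPR": fp / n_human if n_human else float("nan"),
    }

def compare(detectors: Dict[str, Detector], samples: List[Sample]) -> Dict[str, Dict[str, float]]:
    """Same samples and same metrics for every tool, so the numbers line up."""
    return {name: evaluate(fn, samples) for name, fn in detectors.items()}

# Placeholder data and toy detectors, not real tools or results.
samples = [
    ("We delve into the key factors shaping this trend.", True),
    ("Overall, the results are promising.", True),
    ("wrote this on the bus, sorry for typos", False),
    ("My aunt said we should delve into the attic boxes.", False),
]
toy_detectors = {
    "flag_everything": lambda text: True,
    "keyword_rule": lambda text: "delve" in text.lower(),
}
results = compare(toy_detectors, samples)
for name, metrics in results.items():
    print(name, metrics)
```

The `flag_everything` baseline shows why one headline number is not enough: it reaches a perfect TPR while flagging every human text.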

Clear guidelines also benefit sectors like academic integrity and plagiarism detection by ensuring fairness and trust in artificial intelligence assessments.

Open-Source Tools and Datasets for Benchmarking

Many open-source tools rely on shared datasets to measure AI detector accuracy. These resources help test systems against real-world examples, making the results more reliable.

Adversarial AI detection datasets

Adversarial AI detection datasets test how well tools spot tricky, AI-generated content. They include examples crafted to confuse detectors, like rephrased or blended text from human and AI sources.

These datasets stress-test a detector’s precision and recall. Originality.ai Version 2.0 uses open-source benchmark data to improve accuracy on such tough cases.

Researchers rely on these datasets to sharpen detection models. They assess the true positive rate (catching AI content) and false negative rate (missing it). This step helps refine machine learning algorithms while addressing weak spots in identifying generative AI outputs.

Adversarial testing helps push AI detection toward greater reliability across varied scenarios.

Benchmark datasets for academic and scientific content

Benchmark datasets play a key role in testing AI detection tools. For academic writing, Originality.ai showed 94.5% accuracy in spotting ChatGPT-generated text. It even reached 100% accuracy on Swedish student essays, suggesting it can handle different styles and languages.

Such datasets often include diverse texts like research papers or student assignments to mimic real-world scenarios. These collections help measure true positive rates for AI-generated content and true negative rates for human writing.

This helps ensure detection tools don’t overlook important details or flag genuine work unfairly.

Challenges in Establishing Industry Standards

Setting universal rules for AI detection is tricky. Missteps can lead to errors that hurt users and spark debates.

False positives and false negatives

A false positive happens when AI detection tools mark human-written text as AI-generated. This can cause issues, especially for non-native English writers or students striving to maintain academic honesty.

Originality.ai’s tests showed a 1% false positive rate in one example, while another test saw rates between 0.8% and 7.6%. Such inaccuracies may harm trust in these tools.
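Even a small false positive rate adds up when most submissions are human-written. This sketch estimates how many honest texts get flagged and what share of all flags are correct (the PPV). The batch of 1,000 essays, the 10% share of AI-written texts, and the 95% TPR are assumptions; only the 0.8% and 7.6% false positive rates come from the tests above.

```python
def expected_flags(n_texts: int, ai_share: float, tpr: float, fpr: float) -> dict:
    """Expected detector outcomes for a batch of texts (all inputs are assumptions)."""
    n_ai = n_texts * ai_share
    n_human = n_texts - n_ai
    true_flags = n_ai * tpr       # AI texts correctly flagged
    false_flags = n_human * fpr   # human texts wrongly flagged
    total_flags = true_flags + false_flags
    ppv = true_flags / total_flags if total_flags else float("nan")
    return {"false_flags": false_flags, "ppv": ppv}

# 1,000 essays, 10% AI-written, detector catches 95% of AI text (all assumed).
for fpr in (0.008, 0.076):  # low and high ends of the reported range
    r = expected_flags(1000, 0.10, 0.95, fpr)
    print(f"FPR {fpr:.1%}: ~{r['false_flags']:.0f} honest essays flagged, "
          f"{r['ppv']:.0%} of flags are correct")
```

Under these assumptions, moving from a 0.8% to a 7.6% false positive rate takes the share of correct flags from about 93% down to about 58%.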

A false negative occurs when the tool misses AI-generated content. In Test #1, Originality.ai recorded a false negative rate of just 0.02%, suggesting very high recall (sensitivity) in that test, even though its false positive rate (1%) was higher.

Both kinds of error show the limits of current models in telling large language model outputs apart from genuine human writing.

Defining what qualifies as AI-generated content

AI-generated content refers to text or images created by generative AI systems. Tools such as large language models (LLMs) learn patterns from training data and use them to produce human-like responses.

For instance, a chatbot may draft articles or essays based on prompts provided by users.

Distinguishing AI-generated from human-created content gets tricky. Some outputs blend both types, where humans edit an AI’s draft slightly. This makes identifying clear boundaries harder for detection tools.

As the next section explores, measuring accuracy is key to improving these detectors.

Comparing Popular AI Detection Tools

Some AI detection tools claim high accuracy, yet their results can differ greatly in real-world use. Tests often reveal gaps, showing both strengths and flaws in these systems.

Accuracy analysis of leading tools

Originality.ai showed strong results, achieving the highest F1 score in Test #2. Its true positive rate also varied widely, from 19.8% to an impressive 98.4%. Sapling.AI, on the other hand, lagged with an F1 score of only 37.9%.

These numbers highlight how some AI detectors handle text better than others.

Confusion matrices revealed gaps in reliability for many tools. While Originality.ai excelled at identifying AI-generated content accurately, weaker tools struggled more often with false positives and negatives.

This inconsistency creates challenges for users relying on these systems for academic integrity or business use cases like plagiarism detection or hiring tests.

Confusion matrix results from recent tests

Leading tools show varied results in confusion matrix tests. Test #1 was promising, with a 1% false positive rate and a false negative rate of just 0.02%. In other words, only 1 in 100 human-written texts was wrongly flagged as AI-generated, and AI-generated texts slipped through even less often.
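A confusion matrix is just four counts. The sketch below builds one from true labels and detector predictions and derives the false positive and false negative rates. The 200-sample run is hypothetical and only mirrors the shape of a 1% false positive rate; it does not reproduce Test #1.

```python
from collections import Counter

def confusion_matrix(labels, predictions):
    """Count outcomes; True means "AI-generated" for both labels and predictions."""
    counts = Counter(zip(labels, predictions))  # missing pairs count as 0
    return {
        "TP": counts[(True, True)],
        "FN": counts[(True, False)],
        "FP": counts[(False, True)],
        "TN": counts[(False, False)],
    }

def error_rates(cm):
    fpr = cm["FP"] / (cm["FP"] + cm["TN"])  # human texts wrongly flagged
    fnr = cm["FN"] / (cm["FN"] + cm["TP"])  # AI texts that slipped through
    return fpr, fnr

# Hypothetical run: 100 human texts (1 wrongly flagged), 100 AI texts (all caught).
labels = [False] * 100 + [True] * 100
predictions = [True] + [False] * 99 + [True] * 100
cm = confusion_matrix(labels, predictions)
print(cm)               # {'TP': 100, 'FN': 0, 'FP': 1, 'TN': 99}
print(error_rates(cm))  # (0.01, 0.0)
```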

In Test #2, results depended on the test conditions: false positive rates ranged from 0.8% to 7.6%. That variation shows how hard it is to balance sensitivity and specificity in tools like Originality.ai.

High false positive rates mislabel legitimate work, raising ethical concerns for students and writers who use these platforms to check the quality or originality of their text.

Best Practices for Evaluating AI Detectors

Test AI detectors on various writing styles, like essays or blogs. Use realistic content to see how well they spot AI-generated text.

Importance of using custom datasets

Custom datasets improve AI detection accuracy. They reflect specific contexts and needs, unlike generic benchmark datasets. Testing tools like Originality.ai with custom samples often gives more reliable results.

For instance, academic or creative industries benefit from evaluating tools using samples close to their real-world content.

Generic data can miss niche patterns or subtle differences in human-generated content versus AI-created text. Custom sets help identify incorrect classifications more effectively too.

Independent tests using specialized data provide clearer insights into how a tool performs under unique scenarios, making decisions smarter and outcomes sharper.

Conducting independent tests

Testing AI detection tools on your own can shed light on their accuracy. Use text samples from both human-generated content and AI-generated content. Include diverse types, like academic papers or casual blog posts.

This approach exposes hidden biases in the tool’s performance.

Run multiple tests with varied datasets to spot patterns. For example, some detectors struggle with text written or edited by non-native English writers, or fail to distinguish paraphrased text from original writing.
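Breaking results down by content type is what exposes those patterns. A minimal sketch, assuming you have already run a detector on labeled samples and recorded whether each one was flagged; the category names and rows are invented for illustration.

```python
from collections import defaultdict

# Each row: (category, is_ai, flagged_by_detector). Made-up results.
detector_runs = [
    ("academic", True, True), ("academic", True, True),
    ("academic", False, False), ("academic", False, True),
    ("blog", True, True), ("blog", True, False),
    ("blog", False, False), ("blog", False, False),
]

def per_category_rates(rows):
    """Compute TPR and FPR separately for each content category."""
    buckets = defaultdict(lambda: {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
    for category, is_ai, flagged in rows:
        b = buckets[category]
        if is_ai:
            b["tp" if flagged else "fn"] += 1
        else:
            b["fp" if flagged else "tn"] += 1
    report = {}
    for category, b in buckets.items():
        ai_total, human_total = b["tp"] + b["fn"], b["fp"] + b["tn"]
        report[category] = {
            "TPR": b["tp"] / ai_total if ai_total else None,
            "FPR": b["fp"] / human_total if human_total else None,
        }
    return report

for category, rates in per_category_rates(detector_runs).items():
    print(category, rates)
```

In this toy data, the detector catches every AI academic text but wrongly flags half of the human ones, while on blog posts it flags no human text but misses half the AI text. A single overall score would hide both problems.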

Analyzing outcomes helps you pick reliable tools for specific needs, especially in areas like plagiarism checking or academic honesty monitoring.

How AI Detectors Handle Quotations in AI-Generated Text

AI detectors often struggle with identifying quotations in AI-generated text. Quoted sections, especially those from human-created content, can confuse detection tools. Some detectors mistakenly flag these as AI-written due to matching patterns or styles found in large language models (LLMs).

This creates false positives, which harms accuracy.

Tools need better algorithms to handle this issue: they should separate quoted material and analyze it apart from the author’s own sentences. Plagiarism checkers like Turnitin already highlight quotes, but that handling may not carry over to detecting AI-generated text.

Improving precision here is crucial for academic honesty and intellectual property protection.
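One way to act on the idea of separating quoted material is to strip quotations before scoring. The sketch below is an illustration under stated assumptions, not how any particular detector works: it only recognizes passages in straight or curly double quotes, and `detector` stands in for a hypothetical function that returns an AI-likelihood score.

```python
import re
from typing import Callable, List, Tuple

# Straight "..." or curly \u201c...\u201d double quotes only (an assumption);
# block quotes, single quotes, and citation markup are not handled.
QUOTE_PATTERN = re.compile(r'"[^"]*"|\u201c[^\u201d]*\u201d')

def split_quotes(text: str) -> Tuple[str, List[str]]:
    """Return (text with quotations removed, list of quoted passages)."""
    quotes = QUOTE_PATTERN.findall(text)
    remainder = QUOTE_PATTERN.sub(" ", text)
    remainder = re.sub(r"\s+", " ", remainder).strip()  # tidy leftover spacing
    return remainder, quotes

def score_without_quotes(text: str, detector: Callable[[str], float]) -> float:
    """Score only the author's own words with a hypothetical detector."""
    own_words, _quotes = split_quotes(text)
    # If everything was quoted, there is nothing of the author's own to score;
    # returning 0.0 here is a design choice, not a standard.
    return detector(own_words) if own_words else 0.0

text = 'The report states, "Results were mixed." I think the data says more.'
print(split_quotes(text))
```

The quoted passages are returned rather than discarded, so a tool could still check them separately, for example against a plagiarism database.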

Societal Impacts of Inaccurate AI Detection

False positives or missed detections can harm academic honesty, damage trust in AI tools, and create confusion for decision-makers—read on to see why accuracy truly matters.

Consequences of undetected AI-generated content

Undetected AI-generated content can spread false information quickly. Fake reviews on platforms like Snapdeal rose by 2,722% from 2018 to 2024. These fake reviews trick buyers into trusting bad products or services, damaging businesses and customers alike.

Job scams can also increase with bogus applications created by generative AI tools.

Academic integrity faces a major threat when students submit AI-written essays that go undetected. Schools struggle to tell original work from machine-generated text. This can erode critical thinking skills and devalue honest human effort.

Combatting such issues requires better AI detection accuracy and reliable tools.

Ethical concerns around false accusations

False accusations harm reputations fast. A professor could fail a student if an AI detector wrongly flags human-written work as AI-generated. Such errors undermine fairness and the student’s trust in education.

Such errors raise questions about intellectual property rights too.

Non-native English writers face unfair risks. Simpler or more formulaic phrasing can trigger false positives from AI detection tools, making their genuine work look machine-generated. These mistakes hurt fairness and discourage creativity and open dialogue in learning spaces.

Conclusion

AI detectors are improving, but clear standards don’t exist yet. This gap creates confusion and inconsistency in how tools measure accuracy. Without common benchmarks, trust in these systems can waver.

To build confidence, developers must prioritize fair testing and transparency. Reliable AI detection is not just about tech—it’s about protecting fairness and integrity everywhere content is created.