Struggling to keep your writing style consistent? It’s a common issue, especially with so many tools creating content today. Can AI detectors check style consistency in writing? This blog will explore how these tools work and what they can (and can’t) do.

Keep reading to find out!

Key Takeaways

- AI detectors analyze patterns such as predictability and sentence variety to identify writing style consistency.

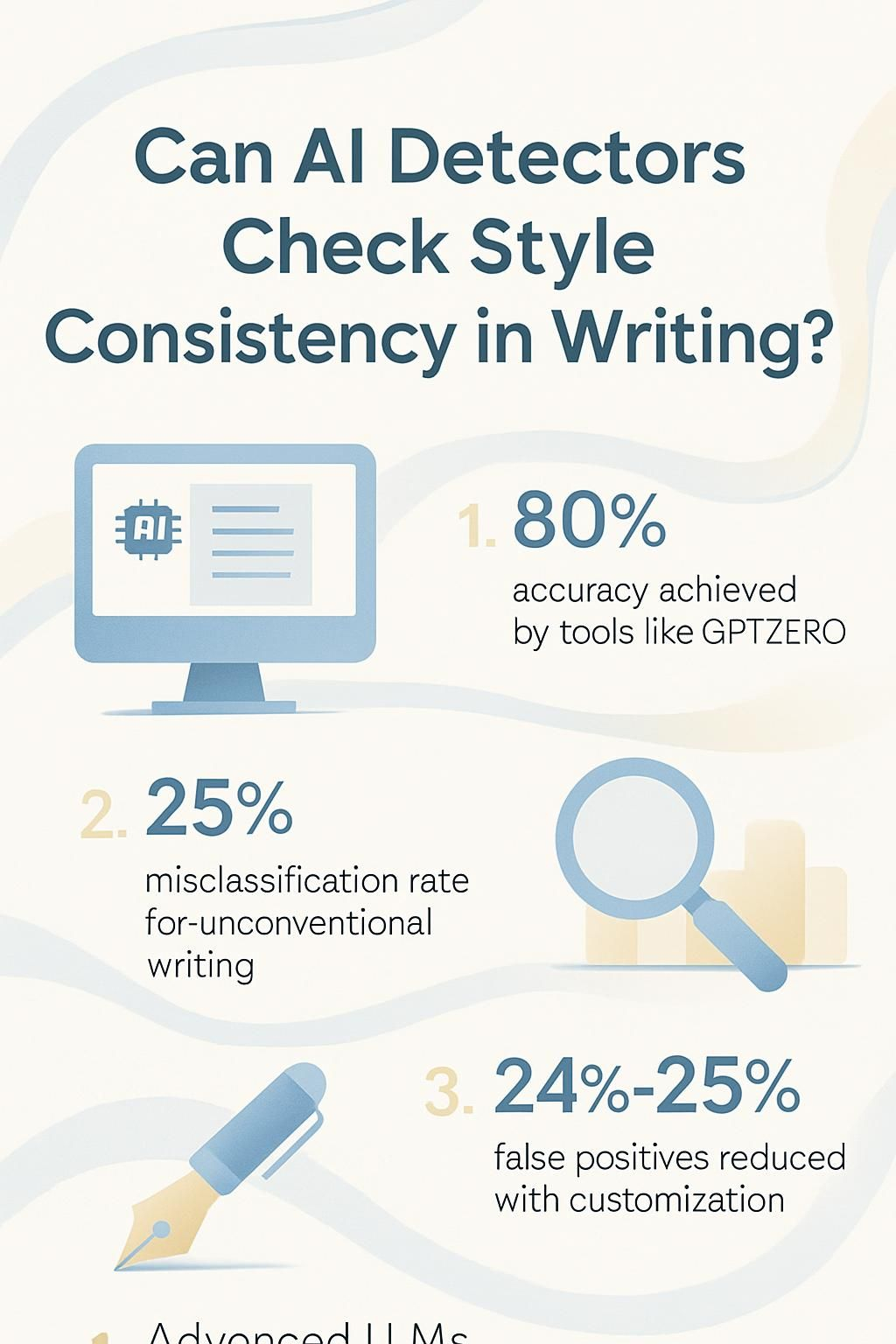

- Tools like GPTZero achieve up to 80% accuracy but still misclassify creative or unconventional writing up to 25% of the time.

- Human-written texts mix tones and styles, complicating AI tools. Generative AI tends to be uniform, but advanced LLMs (e.g., GPT-4) make distinguishing even harder.

- Combining human review with AI detection produces better results. Manual editing identifies errors that machines often overlook.

- Adapting detectors for specific formats increases precision. Customized training can reduce false positives, which studies currently put at about 24%-25%.

What Are AI Detectors?

AI detectors are tools that check if content was created using artificial intelligence. These tools analyze text for patterns, word choices, and sentence structures often linked to AI-generated content.

They also examine metadata like file history or writing speed.

Many AI detection tools use machine learning models like GPT-3.5 to make predictions. Their methods involve measuring “perplexity,” which shows how unpredictable the text is to a language model, and “burstiness,” which tracks variation in sentence length or style.

Though useful, these detectors provide probabilities rather than proof of authorship.

How Do AI Detectors Work?

AI detectors scan text for patterns, quirks, and language flow. They use algorithms to spot irregularities that might reveal non-human writing.

Key methods used by AI detectors

AI detectors rely on advanced techniques to catch patterns in writing. They focus on elements like text structure, complexity, and style.

- Analyze perplexity: This checks how predictable each word is given the words around it. AI-generated content is often highly predictable, which shows up as low perplexity.

- Examine burstiness: Burstiness measures sentence variety. Human-written texts mix short and long sentences, but AI texts tend to be more uniform.

- Review metadata: Detectors study hidden data about the file, such as editing history or timestamps. This can show if an AI tool generated the content.

- Pattern recognition: Machine learning allows detectors to spot repeated writing styles typical of AI tools like GPT-3.5 or GPT-4.

- Compare datasets: Detectors match text against databases of AI-generated examples to flag suspicious similarities.

- Check for overuse of common phrases: Generative AI sometimes repeats certain expressions unnaturally, which skilled detectors can identify.

- Identify unique formatting traits: Unusual spacing, punctuation use, or capitalization are signs some tools watch for in generative AI content.

- Test against academic integrity markers: For essays or exams, detectors verify originality by reviewing plagiarism risks along with stylistic checks.

- Assess tone shifts: Sudden changes in voice may indicate sections generated by different authors, possibly involving human-written and AI outputs mixed together.

- Use natural language processing (NLP): NLP breaks down grammar and meaning to compare how “human” the writing sounds versus predictive text patterns from large language models (LLMs).
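One of the simpler checks in the list above, spotting overused phrases, can be sketched in a few lines. This is a toy illustration, not how any particular detector works: the function name `repeated_phrases`, the phrase length `n`, and the `min_count` cutoff are all assumptions made for the example.

```python
import re
from collections import Counter


def repeated_phrases(text: str, n: int = 3, min_count: int = 2) -> dict[str, int]:
    """Return every n-word phrase that appears at least min_count times."""
    # Lowercase and keep only word characters so punctuation does not split phrases.
    words = re.findall(r"[a-z']+", text.lower())
    # Slide a window of n words across the text to build each phrase.
    ngrams = (" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    counts = Counter(ngrams)
    return {phrase: count for phrase, count in counts.items() if count >= min_count}


sample = (
    "It is important to note that style matters. "
    "It is important to note that tone matters too."
)
print(repeated_phrases(sample, n=4))
```

A repeated phrase is only a hint. Plenty of human writers lean on stock expressions too, so a count like this would be one signal among many.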

Perplexity and burstiness in writing

Perplexity measures how predictable a text is. Lower perplexity means the words follow common, simple patterns, often seen in AI-generated content. Human-written text tends to have higher perplexity because people use varied vocabulary and unexpected phrasing.
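To make the idea concrete, here is a minimal sketch of perplexity under a toy word-frequency model. Real detectors are assumed to use large language models rather than simple word counts; the function `unigram_perplexity`, the add-one smoothing, and the tiny reference text are illustrative assumptions only.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of text under word frequencies learned from reference.

    Uses add-one smoothing with a single slot reserved for unseen words.
    """
    ref_tokens = tokenize(reference)
    counts = Counter(ref_tokens)
    vocab_size = len(counts) + 1  # +1 slot for any word not in the reference
    total = len(ref_tokens)

    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text contains no words")

    # Sum the log-probability of each word, then average and exponentiate.
    log_prob = sum(
        math.log((counts[tok] + 1) / (total + vocab_size)) for tok in tokens
    )
    return math.exp(-log_prob / len(tokens))


reference = "the cat sat on the mat"
print(unigram_perplexity("the cat sat", reference))          # familiar words, lower score
print(unigram_perplexity("quantum zebra waltz", reference))  # unseen words, higher score
```

The second phrase scores higher because none of its words appear in the reference text, so the model finds every one of them surprising.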

Burstiness refers to shifts in sentence length and structure. Humans write with more burstiness by mixing short and long sentences naturally. AI text feels uniform, with steady rhythms that lack these spikes.

This difference helps AI detection tools tell human-written content from AI-generated text faster.
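Burstiness has no single agreed formula. One simple stand-in, assumed here purely for illustration, is the spread of sentence lengths divided by their average (the coefficient of variation). The function names below are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word count of each sentence, splitting on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Standard deviation of sentence length divided by the mean length."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # one sentence cannot vary
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = (
    "Stop. The storm rolled in over the hills before anyone "
    "had time to close the windows. We ran."
)
print(burstiness(uniform))  # 0.0, every sentence has four words
print(burstiness(varied))   # well above zero
```

A score near zero means every sentence is about the same length. Higher values mean the writer mixes short and long sentences.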

Humans think in waves; machines think in straight lines.

Can AI Detectors Analyze Style Consistency?

AI detectors can spot writing habits, like word choice and sentence flow. They compare these traits to check if the style stays steady across a piece.

Identifying patterns in writing style

Patterns in writing style involve analyzing sentence structure, word choice, and rhythm. AI detection tools can spot trends like repeated words or similar phrases. For example, variations in sentence length often help distinguish human-written content from AI-generated text.

Tools like GPTZero have shown up to an 80% accuracy rate in identifying these traits.

Perplexity measures how predictable the text is, while burstiness checks for sudden shifts between short and complex sentences. These metrics reveal subtle stylistic differences between writers.

By focusing on such patterns, AI detectors compare texts to find signs of generative AI or evaluate consistency across documents.
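A consistency check along these lines might compare each paragraph against the rest of the document. The sketch below uses only one feature, average sentence length, and flags paragraphs that stray too far from the document's median. The function names and the 50% `tolerance` are assumptions for the example; a real tool would likely combine many more features.

```python
import re
import statistics


def mean_sentence_length(paragraph: str) -> float:
    """Average number of words per sentence in one paragraph."""
    sentences = [s for s in re.split(r"[.!?]+", paragraph) if s.strip()]
    if not sentences:
        return 0.0
    return statistics.mean(len(s.split()) for s in sentences)


def flag_inconsistent_paragraphs(paragraphs: list[str], tolerance: float = 0.5) -> list[int]:
    """Return indexes of paragraphs whose average sentence length differs
    from the document median by more than tolerance (as a fraction)."""
    means = [mean_sentence_length(p) for p in paragraphs]
    baseline = statistics.median(means)
    return [
        i for i, m in enumerate(means)
        if abs(m - baseline) > tolerance * baseline
    ]


paragraphs = [
    "The report is short. It covers three topics.",
    "Each topic gets one page. Charts support the main points.",
    "Furthermore, the comprehensive evaluation of multifaceted stakeholder "
    "considerations necessitates a thorough and systematic examination of "
    "all relevant contextual factors.",
    "The summary is brief. Readers can skim it.",
]
print(flag_inconsistent_paragraphs(paragraphs))  # [2]
```

The third paragraph stands out because its single 19-word sentence is far longer than the four- and five-word sentences around it.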

Comparing human vs. AI-generated text styles

Human writing and AI-generated text often differ in style and structure. These differences arise due to distinct patterns in creation. Let’s outline the contrasts.

| Feature | Human Writing | AI-Generated Text |
|---|---|---|
| Sentence Variety | Often includes a mix of short, punchy sentences and long, flowing ones. Feels spontaneous. | Typically uniform, with balanced sentence lengths. Lacks natural fluctuation. |
| Word Choice | Depends on the writer’s tone, mood, and creativity. May use idioms or slang. | Sticks to formal or textbook-like language. Rarely includes idiomatic expressions. |
| Burstiness | High burstiness, with sudden shifts in rhythm and energy levels. Keeps readers on their toes. | Low burstiness, with steady pacing. May feel predictable or mechanical. |
| Context Awareness | Adapts to nuanced situations. Reflects personal experiences or knowledge. | Relies on patterns in training data. Struggles with hyper-nuance or context-heavy details. |
| Consistency | May vary depending on tone, purpose, or audience, making it feel dynamic. | Maintains a consistent tone, but this can feel rigid or bland over time. |
| Editing Impact | Texts revised by humans often appear polished, blending creativity with clarity. | Edited AI text might feel more natural, yet traces of uniform patterns remain. |

Human creativity stands out in its bursts and spontaneity, while AI outputs lean towards steadiness. Writing style differences can make one feel alive and the other, calculated.

Limitations of AI Detectors in Style Consistency

AI detectors often stumble with writing that bends rules or breaks norms. They also struggle to handle text with mixed tones or varying formats.

Challenges with creative or unconventional writing

Creative and unconventional writing often confuses AI detection tools. These systems are trained to spot patterns, but creativity breaks those norms. For example, using unusual metaphors or shifting tones can make human-written content look like it came from generative AI.

A Cooperman and Brando study revealed that detectors only hit 63% accuracy, leaving room for errors.

False positives pose another issue. Detectors misclassify intricate or imaginative text in up to 25% of cases. This creates problems for writers experimenting with style or format changes.

Tools meant for plagiarism detection may flag such content unfairly, making them unreliable for testing diverse styles in both schoolwork and professional settings.
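Accuracy and false positive rate are easy to mix up, so a small worked example may help. The data below is invented for illustration and does not come from any study; the function name `detector_metrics` is likewise an assumption.

```python
def detector_metrics(is_ai: list[bool], flagged: list[bool]) -> dict[str, float]:
    """Compute accuracy and false positive rate for a detector.

    is_ai:   ground truth, True if the text really was AI-generated
    flagged: detector verdict, True if the detector called it AI
    """
    if not is_ai or len(is_ai) != len(flagged):
        raise ValueError("inputs must be non-empty and the same length")

    pairs = list(zip(is_ai, flagged))
    true_pos = sum(1 for truth, pred in pairs if truth and pred)
    true_neg = sum(1 for truth, pred in pairs if not truth and not pred)
    false_pos = sum(1 for truth, pred in pairs if not truth and pred)

    human_total = false_pos + true_neg
    return {
        # Share of all verdicts that were correct.
        "accuracy": (true_pos + true_neg) / len(pairs),
        # Share of human-written texts wrongly flagged as AI.
        "false_positive_rate": false_pos / human_total if human_total else 0.0,
    }


# Invented toy data: four AI-written texts followed by four human-written ones.
truth = [True, True, True, True, False, False, False, False]
verdicts = [True, True, True, False, True, False, False, False]
print(detector_metrics(truth, verdicts))
```

In this toy run the detector gets six of eight texts right (75% accuracy) and wrongly flags one of four human writers (a 25% false positive rate). The second number is the one that matters most to a writer being accused unfairly.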

Issues with diverse writing tones and formats

AI detectors often struggle with diverse writing tones. Human-written content can shift between formal, casual, or humorous styles, making pattern recognition harder for detection tools.

Generative AI text usually sticks to one tone or structure, yet some detectors still label it incorrectly as human-made. Studies show that even top-tier AI detection tools like GPTZero top out at about 80% accuracy, highlighting these challenges.

Formats also add complexity. A blog post differs greatly from a text message or academic paper in style and layout. Plagiarism checkers and AI detection tools may misinterpret unconventional formats as errors or automation marks.

This inconsistency creates gaps where AI struggles to label writing accurately across various contexts and genres.

Advanced Technologies in AI Detectors

AI detectors now use smarter algorithms to catch writing patterns. They also study language with tools that mimic human understanding.

Machine learning for dynamic style detection

Machine learning improves how AI detectors spot style changes in writing. These systems analyze patterns like word choice, sentence flow, and rhythm to detect shifts. Advanced models, like GPT-4, make this process harder but also drive innovation.

Machine learning techniques let detectors adapt over time; they learn from new data to identify writing styles more accurately.

AI detection tools use algorithms to handle dynamic text styles. For example, perplexity measures predictability in sentences. Burstiness checks for sudden variations in sentence length or structure.

Despite these advances, studies show a 63% accuracy rate with AI content detectors so far. False positives still hit nearly 25%, so there is room for improvement as NLP technology gets better at handling diverse forms of expression.

Natural language processing (NLP) advancements

NLP advancements have made AI detection tools much smarter. These tools now study perplexity and burstiness, which reveal how predictable or varied text is. For example, human-written content often has bursts of creativity and flow that can seem less consistent than AI-generated content.

AI detectors also use machine learning to spot patterns in writing structure and style. This allows them to compare generative AI output with human-written material more effectively.

Such improvements help detect nuances in tone, choice of words, and sentence rhythm better than before.

Best Practices for Using AI Detectors

Pair AI detectors with human insight for sharper results. Tweak tools to match your writing style needs.

Combining AI tools with human review

AI detection tools can spot patterns, but they aren’t always perfect. Studies have shown that AI detectors like GPTZero hit around 80% accuracy. This means errors still slip through the cracks.

Human review adds a layer of judgment machines lack, especially for creative or complex writing styles.

Using both methods works best. For example, track changes in Office 365 can highlight edits for human reviewers to study alongside AI results. Combining these steps leads to better checks on style and tone without fully relying on algorithms alone.
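One way to picture this pairing is a simple triage rule: the detector's score decides who looks at the text, and a person makes the final call. The sketch below is hypothetical. The function name `triage` and the 0.3 and 0.8 cutoffs are assumptions, and real tools report scores in their own ways.

```python
def triage(ai_score: float, low: float = 0.3, high: float = 0.8) -> str:
    """Route a text based on a detector score between 0 and 1.

    The score is treated as a probability, not proof, so even a high
    score leads to human review rather than an automatic verdict.
    """
    if not 0.0 <= ai_score <= 1.0:
        raise ValueError("ai_score must be between 0 and 1")
    if ai_score < low:
        return "pass"
    if ai_score < high:
        return "human_review"
    return "priority_human_review"


# Hypothetical scores for three documents.
scores = {"essay_a": 0.12, "essay_b": 0.55, "essay_c": 0.91}
queue = {name: triage(score) for name, score in scores.items()}
print(queue)
```

The design choice here is that no score, however high, produces an automatic verdict. The detector only sets the order in which a human reviewer reads things.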

Customizing detectors for specific writing styles

Tuning AI detection tools to match specific writing styles improves accuracy. By adjusting these detectors, users can analyze human-written content or AI-generated content with better results.

For example, a detector trained on formal language might struggle with casual Instagram captions. Customizing detectors allows them to understand diverse formats like academic essays, blog posts, or creative fiction.

Advanced machine learning models help refine this process. They adapt based on patterns in perplexity and burstiness within the text. This reduces false positives and negatives caused by unique tones or unconventional phrasing.

Studies show that some current systems still misclassify about 37% of cases (the flip side of a 63% accuracy rate), highlighting why customization is crucial for clearer analysis.
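Customization can be as simple as setting a different flagging threshold for each format. The sketch below picks a threshold from detector scores on texts already known to be human-written, so that only a chosen share of them would be flagged. The scores are invented, and `calibrate_threshold` and `max_false_positive_rate` are hypothetical names, not part of any real tool.

```python
import math


def calibrate_threshold(human_scores: list[float], max_false_positive_rate: float = 0.1) -> float:
    """Pick a threshold from scores of known human-written texts.

    A text counts as flagged when its score is strictly above the
    threshold, so at most the allowed share of these samples is flagged.
    """
    if not human_scores:
        raise ValueError("need at least one score")
    if not 0.0 <= max_false_positive_rate < 1.0:
        raise ValueError("rate must be at least 0 and below 1")

    ordered = sorted(human_scores)
    # Number of human samples we are willing to flag by mistake.
    allowed = math.floor(max_false_positive_rate * len(ordered))
    return ordered[len(ordered) - allowed - 1]


# Invented detector scores for human-written samples in two formats.
formal_essays = [0.05, 0.1, 0.1, 0.15, 0.2, 0.2, 0.25, 0.3, 0.3, 0.4]
casual_captions = [0.1, 0.2, 0.3, 0.35, 0.4, 0.5, 0.55, 0.6, 0.7, 0.9]

thresholds = {
    "formal": calibrate_threshold(formal_essays),
    "casual": calibrate_threshold(casual_captions),
}
print(thresholds)
```

In this made-up data, casual captions score higher even though humans wrote them, so they get a higher threshold. A single shared cutoff would flag far more of them by mistake.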

Alternatives to AI Detectors for Style Consistency

Manual editing can catch subtle tone shifts better than any tool. Professional editors bring a sharp eye and experience that technology can’t fully replace.

Using manual editing tools

Manual editing tools play a big role in checking writing style. They help spot tone issues, awkward phrases, and inconsistent formatting. Unlike AI detectors, these tools offer more control to the writer.

Tools like Grammarly or Hemingway Editor highlight errors while letting users adjust text manually for clarity.

Writers can also track specific rules for grammar and punctuation without relying solely on technology. For example, style guides used by professional editors ensure consistency across complex documents.

While useful alone, manual edits work best alongside AI detection tools to refine human-written content further. Next up is how professionals refine styles directly with human expertise!

Employing professional style editors

Professional style editors bring a sharp eye to writing. They catch issues AI detectors might miss, like tone mismatches or phrasing errors. Unlike machines, they understand context and creative nuances.

This is especially helpful with complex assignments or diverse content formats.

AI detection tools may flag false positives or negatives by mistake. Studies show their accuracy rate can sit as low as 63%, which isn’t foolproof. Pairing these tools with human reviewers fills gaps in consistency checks.

Professional editors ensure the final text aligns smoothly with the desired tone and purpose without relying only on algorithms.

Future Possibilities for AI Detectors

AI detectors might become sharper with personal training, adapting to individual writing habits. They could also blend into writing tools, offering tips as you type.

Enhanced accuracy through personalized training

Personalized training boosts the accuracy of AI detection tools. By tailoring models to specific writing styles, detectors can better spot patterns in human-written content versus generative AI text.

For instance, studies by Liu et al. (2024) show that fine-tuning these tools improved performance by 63%. This reduces false positives, which currently range from 24.5% to 25%, making results more reliable.

Many writers use unique tones or sentence structures, so generic detectors may struggle with accuracy. Customized training helps AI understand such nuances better. With repeated exposure to specific formats or tones, the detector “learns” and adapts faster, improving its ability to analyze consistency across different documents.

Integration with writing software for real-time suggestions

AI detectors can work alongside writing tools like Grammarly or Microsoft Word. These integrations provide real-time style tips, helping writers edit as they type. For example, the software might flag inconsistent tone or suggest changes to match a formal or casual voice.

Generative AI improves this process by learning patterns in human-written content. It adapts suggestions based on specific needs, whether for essays or reports. Combined with plagiarism detectors, these tools offer better accuracy and immediate feedback during writing sessions.
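A very rough picture of a tone flag, assuming the target voice is formal, is a check for contractions and casual words as the writer types. This is a hypothetical sketch, not the way Grammarly or Microsoft Word work; the pattern and the function name `informal_markers` are made up for the example.

```python
import re

# Contractions such as can't, we're, I've, they'll, plus a few casual words.
# Endings in 's are skipped on purpose because they are often possessives.
INFORMAL_PATTERN = re.compile(
    r"\b\w+['’](?:t|re|ve|ll|d|m)\b|\b(?:gonna|wanna|kinda)\b",
    re.IGNORECASE,
)


def informal_markers(text: str) -> list[tuple[int, str]]:
    """Return (position, word) for each informal marker found in text."""
    return [(m.start(), m.group()) for m in INFORMAL_PATTERN.finditer(text)]


draft = "We can't promise results, and we're gonna need more data."
for position, word in informal_markers(draft):
    print(f"Character {position}: '{word}' may not fit a formal tone")
```

A writing tool could run a check like this on each sentence as it is typed and underline the matches, leaving the decision to the writer.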

Will Future LLMs Make AI Detection Impossible?

Advanced LLMs like GPT-4 are pushing the limits of AI-generated content. They create text that mimics human writing so closely it can fool detection tools. These models, using smarter algorithms and larger training data, reduce signs like repetitive phrases or fabricated facts.

As a result, AI content detectors struggle to catch them accurately.

Studies show that some detectors hit 80% accuracy while others linger at just 63%. But with future models improving even more, these rates might drop further. Generative AI will likely blur lines between human-written content and machine-produced language styles.

This could make spotting patterns harder for detection software relying on existing methods. Detecting style consistency may require new approaches entirely in coming years!

Conclusion

AI detectors can spot patterns and flag unusual writing styles. They help compare human-written content with AI-generated text, but they have their limits. Creative or mixed styles may trip them up.

Pairing these tools with human review can improve accuracy. As technology grows, so will their ability to check style consistency, making them smarter but not perfect.