Struggling to figure out the difference between plagiarism checkers and AI detectors? You’re not alone. Plagiarism checkers hunt for copied text, while AI detectors spot machine-made content.

This blog breaks it all down in simple terms. Keep reading to clear up the confusion!

Key Takeaways

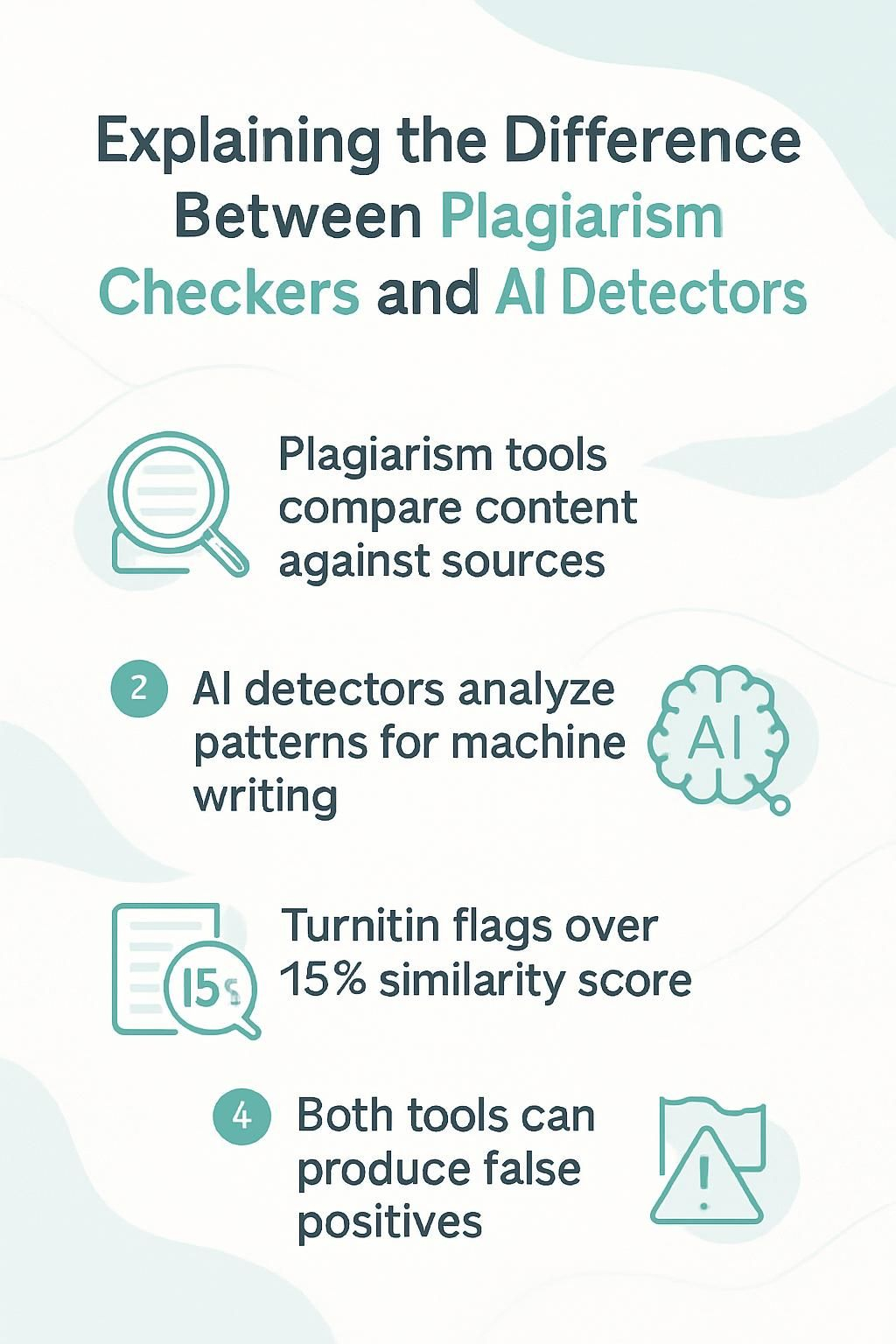

- Plagiarism checkers find copied text by comparing content against books, websites, and articles. AI detectors spot machine-made writing from tools like ChatGPT.

- Plagiarism tools promote originality by showing overlaps in existing sources. AI detectors analyze patterns to identify non-human writing styles.

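- Many institutions treat a Turnitin similarity score of 15% or lower as acceptable for academic work, though each school sets its own cutoff rather than Turnitin. Higher percentages are flagged for a closer look at potential issues.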

- Both tools face false positives. Common phrases or human-written text can get wrongly flagged as plagiarized or AI-generated content.

- Combining plagiarism checkers and AI detectors provides better accuracy for academic and professional integrity checks.

How Plagiarism Checkers Work

Plagiarism checkers scan text for copied or unoriginal material. They compare the input against massive databases of books, websites, scholarly articles, and other sources on the internet.

These tools use machine learning algorithms to detect exact matches and cleverly paraphrased sentences. Some can even catch tricks like hidden characters or swapping synonyms.

Advanced tools such as Turnitin analyze patterns in writing to find similarities. For example, they might spot plagiarized content disguised by changing fonts or turning text into images.

However, these systems aren’t perfect; common phrases or widely known facts may trigger false positives during plagiarism detection.
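To make the comparison step concrete, here is a minimal sketch of one common text-matching idea: break both texts into overlapping word triples ("shingles") and measure how many of the submission's triples also appear in a source. This is an illustrative assumption, not how Turnitin or any specific product works, and the names `shingles` and `containment` are made up for this example.

```python
import re

def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of lowercased word n-grams (shingles) in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def containment(submission: str, source: str, n: int = 3) -> float:
    """Fraction of the submission's shingles that also appear in the source."""
    sub = shingles(submission, n)
    if not sub:
        return 0.0  # text shorter than n words has nothing to compare
    return len(sub & shingles(source, n)) / len(sub)

source = "The quick brown fox jumps over the lazy dog near the river bank."
copied = "The quick brown fox jumps over the lazy dog."
original = "A slow green turtle crawls under the busy bridge."

print(containment(copied, source))    # 1.0: every triple is found in the source
print(containment(original, source))  # 0.0: no triple is shared
```

A sketch like this only catches word-for-word overlap. Spotting paraphrases or swapped synonyms, as described above, takes more than exact matching.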

How AI Detectors Work

AI detectors scan text for patterns only artificial intelligence might create. They analyze sentence structure, coherence, and word choice. Machine learning models help them spot these clues.

These models are trained on huge datasets of AI-generated content and human-written text. For example, OpenAI’s GPT tools often leave a digital fingerprint in how they arrange sentences or repeat certain phrases.
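As a rough illustration of the clues mentioned above, the sketch below computes a few simple style measurements: sentence length, how much sentence length varies, vocabulary variety, and repeated three-word phrases. These particular features and the function name `style_features` are assumptions chosen for the example. Real detectors rely on trained models, and numbers like these cannot decide on their own whether a text is AI-generated.

```python
import re
from collections import Counter
from statistics import mean, pstdev

def style_features(text: str) -> dict[str, float]:
    """Compute a few toy style measurements for a piece of text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    lengths = [len(s.split()) for s in sentences]

    # Count every three-word sequence, then total those seen more than once.
    trigrams = Counter(zip(words, words[1:], words[2:]))
    total = sum(trigrams.values())
    repeated = sum(count for count in trigrams.values() if count > 1)

    return {
        "mean_sentence_length": mean(lengths) if lengths else 0.0,
        "sentence_length_spread": pstdev(lengths) if lengths else 0.0,
        "vocabulary_ratio": len(set(words)) / len(words) if words else 0.0,
        "repeated_trigram_ratio": repeated / total if total else 0.0,
    }

sample = ("It is important to note that cats sleep. "
          "It is important to note that dogs bark.")
for name, value in style_features(sample).items():
    print(f"{name}: {value:.3f}")
```

In a real system, measurements of this kind would be inputs to a classifier trained on labeled human and AI text, not rules applied by hand.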

Such systems must adapt quickly to keep up with new generative AI tools like ChatGPT or Microsoft Copilot. Developers regularly update algorithms as newer AI versions improve at mimicking natural writing styles.

Detecting machine-made language requires both speed and precision because the technology advances quickly. Missteps can lead to false positives, where human-written work is flagged unfairly because its structure or style resembles the AI outputs a detector was trained on.

Key Differences Between Plagiarism Checkers and AI Detectors

Plagiarism checkers spot copied text, while AI detectors assess whether a machine wrote the content: two tools, two goals.

Focus on content originality vs. content generation

Content originality ensures academic integrity by focusing on whether the text is copied or improperly cited. Plagiarism detection tools, like Turnitin, scan for overlapping material in online databases and existing work.

These tools highlight unoriginal parts to maintain content integrity.

AI detectors, however, evaluate if the writing comes from machine learning models or a human mind. Originality means little here; it’s about identifying AI-generated text versus human-written text.

For example, AI plagiarism concerns arise when students submit essays written by chatbots like ChatGPT instead of their own work.

Underlying technology and algorithms

AI detectors rely on machine learning algorithms to analyze text patterns and predict if content is AI-generated. These tools, like Originality.ai or Turnitin’s AI detection features, examine linguistic nuances, such as word choice, sentence flow, and syntax.

They are trained on output from AI models like ChatGPT so they can identify generated text, though accuracy varies and false positives remain possible.

Plagiarism detection tools compare content against vast databases of academic papers, digital publications, and online sources. Tools like Turnitin scan for identical or paraphrased matches in seconds.

While both systems use advanced technologies for analysis, their goals differ: one identifies replication of existing material; the other flags artificially crafted language structures.

Use cases in academic and professional settings

Plagiarism detection tools help students and researchers check for copied parts in their papers. They scan sources like books, articles, and websites to find matches. This promotes academic integrity by showing gaps in originality.

For instance, universities use Turnitin or similar software to meet strict guidelines on content authenticity. Professional writers also rely on these tools to avoid unintentional plagiarism before submitting work.

AI detectors focus on spotting machine-generated text in essays or reports. Companies may use them to enforce rules against AI-written content, especially for blogs or marketing materials where human creativity is valued more.

PhD students often face stricter policies, since overreliance on AI can undermine the critical thinking that doctoral work is meant to demonstrate. Both tools together provide better protection against unethical practices while maintaining high-quality output.

Challenges in Using Plagiarism Checkers and AI Detectors

Both tools struggle with accuracy, sometimes flagging innocent text as problematic. Ethical questions arise when relying too much on machine learning in academic writing.

Accuracy and false positives

Plagiarism detection tools and AI detection tools often produce false positives. Common phrases or general knowledge can get wrongly marked as plagiarized. For example, “The sky is blue” might trigger a match because it exists in multiple sources.

AI-generated content also risks matching plagiarism databases by accident. Cleverly rephrased ideas may still seem too close to original work for these systems. False flags like this can confuse users, making them doubt the reliability of these tools.

Ethical concerns and limitations

AI detection tools often misjudge human-written text as AI-generated, leading to false positives. This affects academic integrity and fairness. Rapid advances in AI make it hard for detection algorithms to stay accurate.

Updating these systems demands significant time and resources.

Bias in machine learning models is another issue. These biases can discriminate against certain writing styles or languages, creating unfair outcomes for non-native speakers. Over-reliance on such tools may discourage creativity and punish honest effort, damaging the spirit of originality in content creation.

Understanding Turnitin’s AI Detection Threshold in Essays

Turnitin scans essays for AI-generated content, like output from ChatGPT. It flags suspicious parts when machine learning models detect patterns common in non-human writing. It checks text structure, word choice, and repeated phrases tied to the algorithms behind text generation tools.

Separately, Turnitin reports a similarity score for text that matches existing sources. Many academic settings treat a score of 15% or lower as safe, although each institution sets its own cutoff. Scores higher than this may raise red flags over originality or improper use of sources. Turnitin also identifies tricks, like using hidden characters or converting words to images, which makes results more reliable for maintaining academic integrity.
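For a feel of the arithmetic behind a similarity percentage, the sketch below assumes the score is simply the share of a submission's words that fall inside matched passages. That definition, the function names, and the use of 15% as the cutoff are simplifying assumptions for illustration, not Turnitin's actual calculation.

```python
def similarity_percent(matched_words: int, total_words: int) -> float:
    """Share of the submission's words that sit inside matched passages."""
    if total_words <= 0:
        raise ValueError("total_words must be positive")
    if not 0 <= matched_words <= total_words:
        raise ValueError("matched_words must be between 0 and total_words")
    return 100.0 * matched_words / total_words

def needs_review(score: float, threshold: float = 15.0) -> bool:
    """True when the score is above the (assumed) acceptable cutoff."""
    return score > threshold

# A 2,400-word essay with 300 words inside matched passages.
score = similarity_percent(matched_words=300, total_words=2400)
print(score, needs_review(score))  # 12.5 False
```

A score above the cutoff is a prompt for a person to look at the matches, since quoted and properly cited passages also count toward the percentage in this simplified model.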

Best Practices for Using Both Tools Effectively

Use both tools together for smarter checks on content quality. This helps catch AI text and possible plagiarism in one go.

Combining tools for comprehensive content analysis

Pairing plagiarism detection tools like Turnitin with AI content detectors such as Originality.ai creates a stronger analysis. Plagiarism checkers scan for copied text by comparing it to sources.

AI detectors identify patterns in AI-generated content, like ChatGPT outputs, using machine learning algorithms.

For academic writing or digital publishing, this combo helps catch both human-written plagiarism and AI-generated text. For example, an essay flagged at 25% similarity on Turnitin could then be run through an AI detector to check for machine-generated passages, improving content integrity checks across platforms.
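One way to picture the combined workflow is a small triage function that takes both results and lists what a human reviewer should look at next. This is a hypothetical sketch: the function name `triage`, the 0 to 1 AI-likelihood scale, and both default thresholds are assumptions, and neither Turnitin nor Originality.ai is being called here.

```python
def triage(similarity_percent: float,
           ai_likelihood: float,
           similarity_threshold: float = 15.0,
           ai_threshold: float = 0.5) -> list[str]:
    """Suggest follow-up steps from a similarity score and an AI-likelihood score.

    similarity_percent: 0-100 value reported by a plagiarism checker.
    ai_likelihood: 0.0-1.0 value reported by an AI detector (assumed scale).
    """
    actions = []
    if similarity_percent > similarity_threshold:
        actions.append("review matched sources and citations")
    if ai_likelihood > ai_threshold:
        actions.append("review for undisclosed AI use")
    if not actions:
        actions.append("no automated flags")
    return actions

# The essay from the example above: 25% similarity, low AI likelihood.
print(triage(similarity_percent=25.0, ai_likelihood=0.2))
# ['review matched sources and citations']
```

The function returns suggestions and not verdicts because both kinds of tool can produce false positives, so a person should make the final call.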

Guidelines for ethical AI and plagiarism prevention

Disclose AI tool usage in essays or research. If you use chatbots like ChatGPT or tools for data analysis, mention them clearly. Honesty builds trust and supports academic integrity.

Cite any ideas or text borrowed from AI-generated content as you would with human-written sources.

Use AI to support critical thinking, not replace it. Let it enhance tasks such as drafting, but keep decision-making and creativity human-led. Check your work through plagiarism detection tools like Turnitin and review results with peers for accuracy before submission.

This keeps content originality strong while respecting ethical writing practices.

Conclusion

Plagiarism checkers and AI detectors serve different purposes, but both play key roles in maintaining content integrity. Plagiarism tools spot copied or uncredited text by scanning databases of written works.

AI detection tools, on the other hand, analyze patterns to flag AI-generated content like that from ChatGPT. Using these tools together can help protect academic honesty and ensure originality in writing while addressing challenges like false positives.

For a deeper understanding of how Turnitin identifies AI-generated content, learn more about Turnitin’s AI detection threshold in essays.