AI detectors often flag human-written work as AI-generated, causing frustration for creators. These tools check traits like “perplexity” and “burstiness” to decide if content seems robotic.

This blog will explore how creators can prove their writing is truly human-authored, using simple but effective strategies. Keep reading to learn how to outsmart these tricky tools!

Key Takeaways

- AI detectors analyze text for patterns like perplexity and burstiness. Human writing has more variety, personal tone, and unpredictability than machine-generated content.

- High-quality human-written work can get flagged as AI-made due to being “too perfect” or repetitive. Raj Khera’s 2011 speech was marked 12% AI-written because of its structured style.

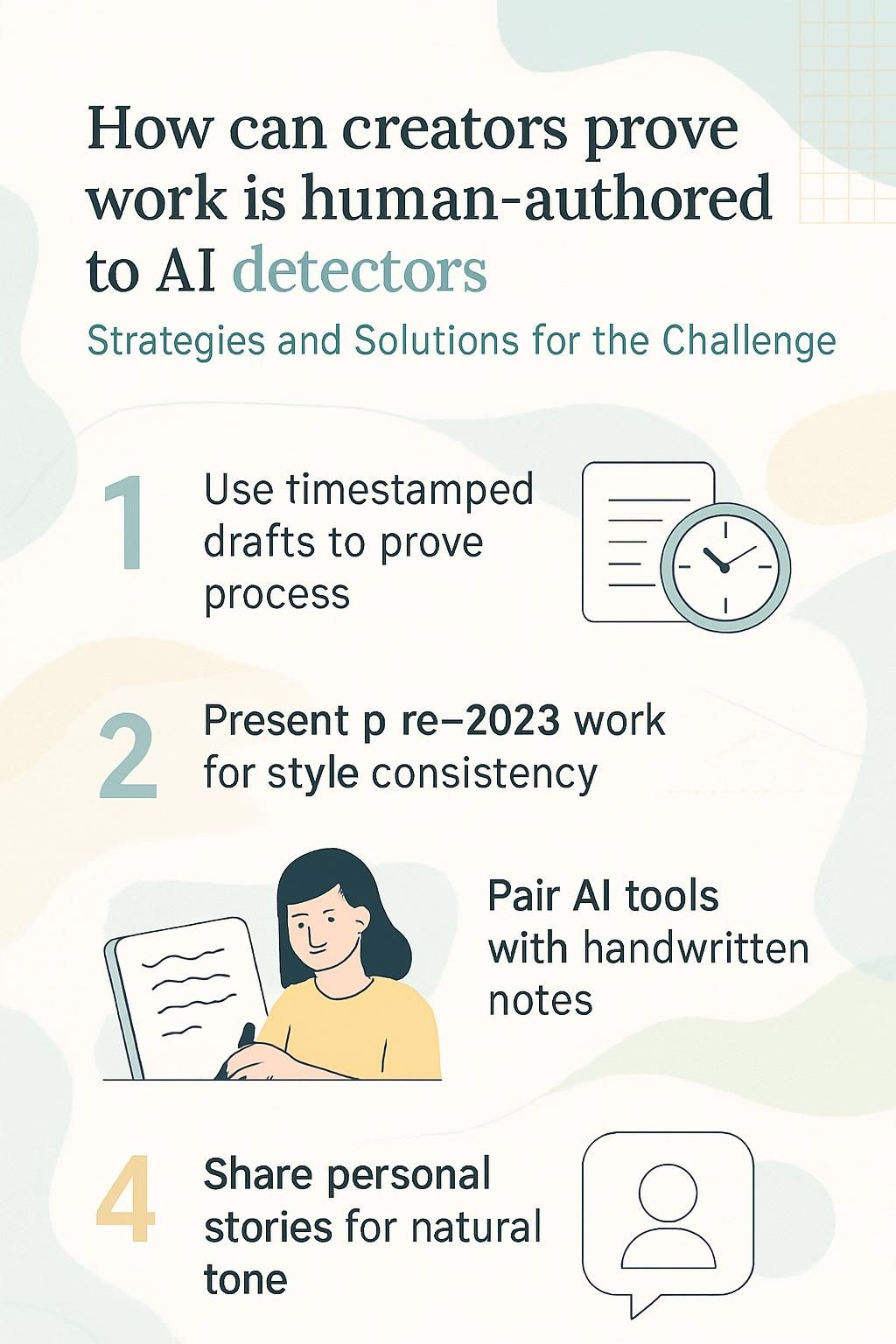

- To prove authorship, writers should use natural tones, share personal stories or experiences, and create timestamped drafts as proof of their process.

- Tools like Originality.ai claim 98% accuracy and a false positive rate under 1%, so errors still happen. Pairing these tools with handwritten notes adds credibility to the writing process.

- Authors can present older examples of their work before generative AI became mainstream (2023) to show consistency in style and originality over time.

How Do AI Detectors Work?

AI detectors scan text using complex patterns and machine learning models. They analyze sentence structures, word choice, and other features to identify if content might be AI-generated.

Perplexity and burstiness metrics

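Perplexity measures how surprised a language model is by a piece of text. AI-generated content usually has low perplexity: each word is the one a model would most likely pick next, so the text feels too predictable. In “I couldn’t sleep last night,” for example, the final word is almost entirely predictable, and machine-written text is full of such safe choices.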

Human writing tends to have higher perplexity with odd word choices or unexpected phrasing.
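To make the arithmetic concrete, here is a minimal sketch built on loud assumptions. Real detectors score text with a large language model; this toy swaps in a unigram word model with add-one smoothing, trained on a tiny hypothetical reference text. Perplexity is the exponential of the average negative log-probability per word, so familiar words score low and surprising words score high. The names `train_unigram`, `perplexity`, and `REFERENCE` are illustrative, not from any detector.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    # Lowercase and keep word-like tokens (apostrophes allowed).
    return re.findall(r"[a-z']+", text.lower())

def train_unigram(corpus: str) -> tuple[Counter, int, int]:
    # Toy stand-in for a language model: word counts from a reference text.
    tokens = tokenize(corpus)
    counts = Counter(tokens)
    return counts, len(tokens), len(counts)

def perplexity(text: str, counts: Counter, total: int, vocab: int) -> float:
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text has no words")
    # Add-one smoothing; the extra +1 reserves probability for unseen words.
    denom = total + vocab + 1
    log_prob = sum(math.log((counts[t] + 1) / denom) for t in tokens)
    # exp of the average negative log-probability per word.
    return math.exp(-log_prob / len(tokens))

# Hypothetical reference text standing in for a model's training data.
REFERENCE = ("I could not sleep last night. Last night was long. "
             "I was tired and I could not sleep.")
model = train_unigram(REFERENCE)

low = perplexity("I could not sleep last night", *model)
high = perplexity("Insomnia ambushed me beneath a jittery moon", *model)
print(f"predictable: {low:.1f}, surprising: {high:.1f}")  # predictable: 9.2, surprising: 29.0
```

Every word in the second sentence is unseen by the toy model, so it lands at the maximum perplexity this model can assign; a real detector makes the same kind of comparison with far richer probabilities.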

Burstiness looks at sentence variety. Machines often write sentences of flat, uniform length and pattern. Humans mix short sentences with longer ones, creating rhythm and contrast. A machine might write: “The cat sat quietly on the mat.” A person might write: “The cat? Oh, it lounged on the mat, looking smug as always.”
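There is no single agreed formula for burstiness, so the sketch below assumes a simple proxy: the coefficient of variation of sentence lengths in words (standard deviation divided by mean). Uniform sentences score near 0, while a mix of short and long ones scores higher. The sentence splitter is a naive regex, an assumption that will mis-split abbreviations such as “Dr.”, and the sample texts are loosely based on the cat example.

```python
import re
import statistics

def sentence_lengths(text: str) -> list[int]:
    # Naive split after ., !, or ? followed by whitespace; fine for a demo.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]

def burstiness(text: str) -> float:
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # a single sentence has no variation to measure
    # Coefficient of variation: spread of sentence lengths relative to their mean.
    return statistics.pstdev(lengths) / statistics.mean(lengths)

machine = ("The cat sat quietly on the mat. The dog slept calmly by the door. "
           "The bird sang softly in the tree.")
human = ("The cat? Oh, it lounged on the mat, looking smug as always. "
         "The dog snored. Then the bird started up, loud enough to wake the street.")

print(f"machine: {burstiness(machine):.2f}, human: {burstiness(human):.2f}")  # machine: 0.00, human: 0.62
```

Dividing by the mean keeps the score comparable between writers who favor short sentences and those who favor long ones.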

Limitations and reliability of AI detectors

AI detectors can misjudge human-written content. High-quality, well-structured writing often gets flagged as AI-generated. For instance, Raj Khera’s 2011 speech showed a false positive score of 12% AI-written.

Even polished essays or technical pieces may appear “too perfect” to algorithms.

False positives highlight the limitations of these tools. As a rule of thumb, scores below 35% are safer for publishing, while anything above 50% suggests likely AI use. Detectors rely on training data and patterns, so they struggle with nuanced styles and personal creativity.

This makes their reliability inconsistent across content types, especially in fields like copywriting and academic writing.
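The 35% and 50% rule of thumb can be written as a small function. This is a sketch of the thresholds stated in this post, not any detector's official scale; the text does not say what to do between 35% and 50%, so labeling that gap “review manually” is an assumption.

```python
def score_band(ai_score: float) -> str:
    """Map a detector's AI score (percent, 0 to 100) to a rough publishing band.

    Thresholds follow this post's rule of thumb: below 35 is safer,
    above 50 suggests likely AI use. The middle band is an assumption.
    """
    if not 0 <= ai_score <= 100:
        raise ValueError("score must be a percentage between 0 and 100")
    if ai_score < 35:
        return "safer to publish"
    if ai_score > 50:
        return "likely AI"
    return "review manually"  # assumed handling for the 35 to 50 gray zone

for score in (12, 40, 72):
    print(score, score_band(score))
```

A 12% score, like the one Raj Khera’s speech received, falls in the safer band even though it is a false positive.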

Common Reasons Human-Written Work is Flagged as AI

Writing that feels stiff or lacks personality can easily trip up an AI detector. Patterns that look too formulaic or repetitious might also send up red flags.

Overuse of formal or repetitive patterns

Overly formal or repetitive phrasing makes content appear mechanical. AI detectors that analyze perplexity and burstiness often mistake such patterns for AI-generated text.

For example, Raj Khera’s 2011 speech was marked as 12% AI-written because of its structured style.

Repeating similar sentence lengths or word choices disrupts the natural flow of writing. Because large language models (LLMs) tend to produce exactly these uniform patterns, detectors tend to treat them as signs of AI, so human-authored work that shares them gets flagged too.

Breaking the monotony with varied syntax and personal touches keeps writing authentic and helps avoid false positives from AI content detectors.
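One quick self-check for repetitive patterns is to count how often sentences open with the same words. The sketch below is a hypothetical helper, not a feature of any detector: it flags any two-word sentence opener that appears at least `min_count` times, using the same naive sentence split as a rough assumption.

```python
import re
from collections import Counter

def repeated_openers(text: str, n_words: int = 2, min_count: int = 2) -> dict[str, int]:
    """Return sentence openers (first n_words words) used at least min_count times."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    openers: Counter = Counter()
    for sentence in sentences:
        words = re.findall(r"[a-z']+", sentence.lower())
        if words:
            openers[" ".join(words[:n_words])] += 1
    # Keep only the openers that repeat often enough to look formulaic.
    return {opener: n for opener, n in openers.items() if n >= min_count}

draft = ("It is important to plan. It is crucial to edit. "
         "It is essential to proofread. Then you publish.")
print(repeated_openers(draft))  # {'it is': 3}
```

Rewriting two of those three sentences to open differently is a concrete way to break the monotony described above.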

Lack of unique stylistic elements

Repeating patterns in writing can make content look like it’s AI-generated. AI detectors often flag work that feels too polished or lacks personality. Human writers naturally have quirks, personal expressions, and varying sentence styles.

A flat tone or overuse of formal language can also raise red flags for detection tools. High-quality human-written content that scores between 15% and 40% on these tests often suffers from this issue.

To avoid false positives, focus on adding natural variety and breaking free from rigid structures.


Strategies for Proving Human Authorship

Writing like a human takes heart, not just skill. Show your personality in your words, and back it up with proof of the process.

Write in a natural and personal tone

Writing naturally means avoiding robotic or overly formal language. Use your voice like you’re chatting with a friend. Share thoughts in simple words, add personality, and avoid trying to sound perfect.

For example, instead of saying “It is crucial to note,” say something authentic like “Here’s the thing.”

Add personal touches to your work. Mention experiences that shaped your views or lessons learned from mistakes. These details make content read as clearly human-written and harder for AI tools to flag as machine-made.

Incorporate personal experiences or anecdotes

Sharing personal stories can highlight that your content is human-written. For instance, mention a memory tied to the topic you’re writing about. This adds authenticity and shows unique thought patterns that AI cannot replicate easily.

Emotional details, like how something made you feel or why an event mattered, stand out in stylometric analysis.

Talking about real-life challenges or funny mishaps makes readers connect with your work on a deeper level. AI tools lack true life experiences, so adding these anecdotes creates natural bursts of original content.

It’s harder for machine learning algorithms to mistake such genuine creativity for AI output.

Use original research and unique insights

Fresh research catches attention. Adding data, facts, or case studies into your writing makes it stand out. Include findings from recent studies or analysis of trends in artificial intelligence (AI).

For example, reports on how transformer architectures improve AI detectors give depth to your work.

Personal insights add more flavor. If you notice a pattern, such as AI detectors (OpenAI’s among them) incorrectly flagging human-written content, share it. Discuss what you have observed about common false positives or generative AI errors.

This keeps the piece engaging and informative while moving toward practical solutions for proving human authorship.

Avoid over-reliance on AI tools for final drafts

AI tools can polish writing, but leaning on them too much risks losing a personal touch. Over-reliance may create stiff or robotic text, which AI detectors often flag as machine-made.

Tools like ChatGPT might repeat patterns or lack human quirks that showcase individuality.

Drafts should start and end with human effort. Use AI sparingly for tasks like grammar checking or brainstorming ideas, not finishing the entire piece. Keep your tone conversational, add humor if it fits, and share real-life thoughts or stories.

Show authenticity in every sentence to stand apart from generative AI content.

Additional Tools and Techniques

Show your writing steps, keep timestamped drafts, or even share handwritten notes: it’s like leaving a trail of breadcrumbs as proof!

Timestamped drafts demonstrating the writing process

Using timestamped drafts is a powerful way to show content is human-written. Tools like Google Docs automatically save every change, creating a clear revision history. These timestamps act as digital proof of your writing journey, showing edits and adjustments made over time.

A single AI-generated output has no such trail of gradual edits, so a revision history is harder to fake. By exporting or sharing these draft histories, creators can support their claim of originality. This documentation helps protect against false positives from AI detection tools like Originality.ai, which claims 98% accuracy but can still make mistakes.
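Google Docs keeps version history for you. If you draft in plain files instead, a comparable trail can be kept by hand. The sketch below is a hypothetical helper, not tied to any tool named in this post: each draft snapshot gets a UTC timestamp and a SHA-256 hash that also covers the previous entry’s hash, so altering an earlier entry breaks the chain. It only shows that the log is internally consistent; it cannot prove when entries were made, because a local clock can be set to anything.

```python
import hashlib
import json
from datetime import datetime, timezone

def add_snapshot(log: list[dict], text: str) -> dict:
    """Append a draft snapshot whose hash chains to the previous entry."""
    prev_hash = log[-1]["hash"] if log else ""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "words": len(text.split()),
        "text_sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
        "prev_hash": prev_hash,
    }
    # Seal the entry by hashing its fields in a stable (sorted-key) encoding.
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    entry["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(entry)
    return entry

def verify(log: list[dict]) -> bool:
    """Recompute every hash; return False if any entry or link was altered."""
    prev_hash = ""
    for entry in log:
        fields = {k: v for k, v in entry.items() if k != "hash"}
        if fields["prev_hash"] != prev_hash:
            return False
        payload = json.dumps(fields, sort_keys=True).encode("utf-8")
        if hashlib.sha256(payload).hexdigest() != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True

history: list[dict] = []
add_snapshot(history, "AI detectors often flag human work.")
add_snapshot(history, "AI detectors often flag human-written work as AI-generated.")
print(verify(history))  # True
history[0]["words"] = 99  # tamper with an earlier entry
print(verify(history))  # False
```

Storing only hashes of each draft, rather than the text itself, lets you share the log without exposing unpublished work, while still being able to show the matching drafts later.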

Providing supplementary handwritten notes or outlines

Handwritten notes or outlines can serve as proof of human effort. AI detectors focus on patterns, but showing physical drafts adds credibility. Jot down key points in a notebook or scribble ideas before typing.

These notes display your thought process and creativity.

AI-generated content lacks the personal touches and messy revisions seen in handwritten work. Scans or photos of these pages strengthen your case if a detection tool like Originality.ai flags your work.

This approach highlights originality while addressing false positives effectively.

Using trusted plagiarism and originality checkers

Proving a text is human-written can be tricky, but trusted tools like Originality.ai can help. The tool claims 98% accuracy and a false positive rate below 1%. It scans for AI-generated text and also checks for plagiarism, giving creators double-duty protection.

A low score from such software supports your claim of originality. Pair it with timestamped files or rough notes to make your case even stronger. These checkers identify copied content and flag unusual patterns often linked to generative AI tools, adding transparency to the content creation process.

Best AI Detection Tools for Academic Papers

Originality.ai stands out as a top choice. It claims 98% accuracy with less than a 1% false positive rate, making it a reliable option for detecting AI-generated text. This tool supports multiple file formats like PDF, .docx, and .txt.

Its features include keyword density checks and file comparisons. Users can also monitor content live in Google Docs.

Other tools, like Turnitin, are built around academic integrity and check for both AI-generated and plagiarized text. Tools such as these help maintain trust in human-written content while ensuring fair use of generative AI during the writing process.

Addressing False Positives with Transparency

Sometimes, perfectly human-written content gets flagged incorrectly. Sharing your writing steps or earlier drafts can help clear up confusion.

Acknowledge any AI tools used during drafting

Disclose any generative AI tools used in your work. Transparency builds trust and credibility. If ChatGPT or a similar tool assisted you, say so clearly. This helps address false positives in AI detection reports.

Use timestamps from platforms like Google Docs to show the human effort behind your content creation process. Pair this with supplementary handwritten notes or outlines for added proof of authenticity.

Be upfront to avoid harming SEO performance and maintain content quality.

Share multiple examples of past human-authored works

Authors can submit timestamped drafts showing their writing process. These drafts act as clear evidence of human effort. Include handwritten notes or outlines if possible, as AI tools cannot easily replicate them.

Sharing older published articles, blog posts, or essays also helps. For instance, content marketers could present past campaigns written before generative AI tools became mainstream in 2023.

This shows a consistent style over time. Writers can attach original research or unique story-driven pieces that match their previous work’s tone and flair.
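One way to make “consistent style over time” measurable, assumed here rather than taken from any detector, is a simple stylometric profile: the relative frequencies of common function words, compared with cosine similarity. The short word list and the sample sentences below are illustrative only; real stylometry uses much longer texts and far larger feature sets.

```python
import math
import re
from collections import Counter

# Small illustrative set of function words; real studies use hundreds.
FUNCTION_WORDS = ["the", "a", "and", "but", "of", "to", "in", "i", "it", "so", "just", "that"]

def profile(text: str) -> list[float]:
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tokens)
    total = len(tokens) or 1  # avoid dividing by zero on empty text
    # Relative frequency of each function word in this text.
    return [counts[w] / total for w in FUNCTION_WORDS]

def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

older = "I just wrote it, and it was fine. But I rewrote it anyway, so that it felt right."
newer = "I just drafted it and it was rough, but I fixed it."
other = "The analysis of the data in the study indicates a correlation of the variables."

print(f"{cosine(profile(older), profile(newer)):.2f}")  # 0.95
print(f"{cosine(profile(older), profile(other)):.2f}")  # 0.00
```

Function words are used because writers pick them largely unconsciously, so they tend to stay stable across topics in a way that vocabulary does not.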

Conclusion

Proving your work is human-authored can feel tricky, but it’s doable. Focus on writing naturally and using personal touches. Save drafts or notes to show your steps. If flagged unfairly, stay transparent about your process and share older works for proof.

With practice, you’ll sidestep AI detection errors like a pro!

For a detailed guide on the top AI detection tools suitable for academic papers, visit Best AI Detection Tools for Academic Papers.