Getting accused of AI cheating can be stressful for any student or administrator. AI detection tools often make mistakes, flagging original human writing as AI-generated. This guide explains how to contest AI detection claims with a dean so you can clear up misunderstandings and protect academic integrity.

Keep reading to learn simple steps to handle this issue smartly!

Key Takeaways

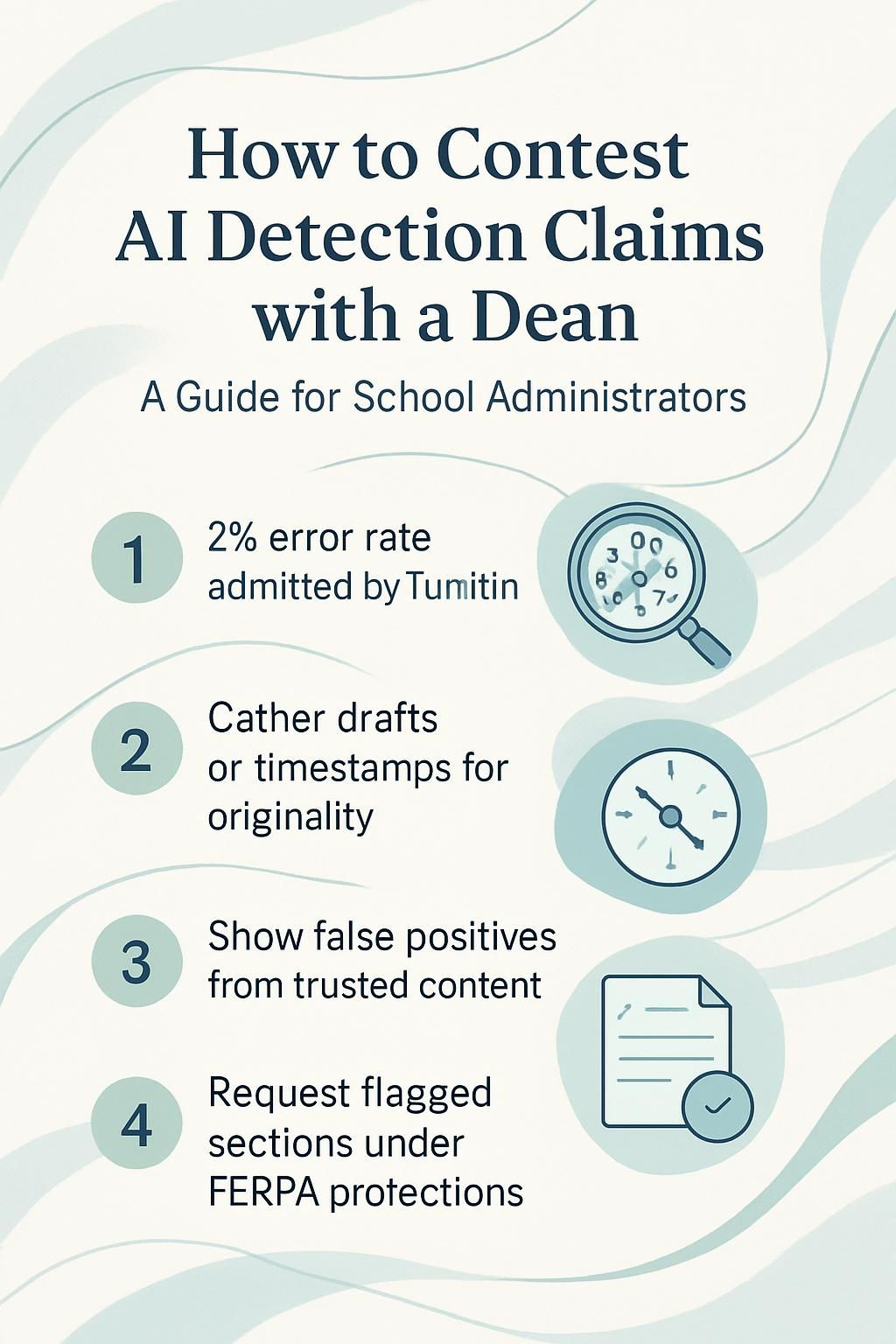

- AI detection tools like Turnitin, GPTZero, and Originality AI can mislabel original work as AI-generated. Turnitin admits a 2% error rate, or roughly 1 in 50 human-written submissions. Vanderbilt University disabled Turnitin's AI detection tool over reliability concerns.

- Gather drafts, outlines, research notes, and file timestamps to prove originality. Bring handwritten edits and class-specific examples to your discussion with the dean.

- Highlight flaws in detection tools by showing that they flag trusted, human-written content, such as classic literary quotes or text with a nuanced writing style.

- Educate staff and students about the limits of AI detectors. Open communication builds trust and prevents unfair accusations of academic dishonesty.

- Legal defenses include asking the institution to show the flagged sections and challenging false claims with evidence, with your records protected under FERPA.

Understanding AI Detection Claims

AI detection tools often misfire, flagging human-written content as machine-generated. Knowing how these tools work can help you spot their flaws.

How AI detection tools work

AI detection tools scan text to spot patterns linked to artificial intelligence. They look for signals such as highly predictable phrasing, repeated sentence structures, and a uniformity that doesn't match typical human writing.

Turnitin claims a 98% accuracy rate in detecting AI-generated content in essays and assignments. Tools such as GPTZero and Originality AI examine word choices and predict whether software like ChatGPT wrote the content.

These systems rely on algorithms trained on millions of samples of human and AI-written text. Rather than looking a submission up in a database, the tools score it against the patterns learned during training and report a probability or percentage.

False positives can occur with creative or formal writing styles since they may resemble AI-generated patterns. For example, an essay written by a professor might unintentionally trigger a flag due to its polished style.

This is why gathering evidence is critical when you dispute an academic misconduct accusation based on these tools.

A tool’s output is only as good as the data it learns from.

Common inaccuracies in AI detection

AI detection tools often mislabel content as AI-generated. Turnitin, for example, has a 2% error rate. This means roughly 1 in every 50 human-written submissions could be flagged, exposing honest students to false accusations of academic dishonesty.
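To see how a small error rate adds up, here is a minimal Python sketch that applies the 2% figure cited above. The function names are illustrative, and the second calculation assumes each check is independent, which is a simplification; real false positive rates vary by tool and by type of writing.

```python
def expected_false_flags(honest_submissions: int, false_positive_rate: float) -> float:
    """Expected number of human-written submissions wrongly flagged as AI."""
    return honest_submissions * false_positive_rate


def chance_of_at_least_one_flag(num_checks: int, false_positive_rate: float) -> float:
    """Probability that at least one of num_checks honest submissions is flagged.

    Assumes every check is independent, a simplifying assumption.
    """
    return 1 - (1 - false_positive_rate) ** num_checks


if __name__ == "__main__":
    rate = 0.02  # the 2% error rate cited in the article
    print(f"Flags per 50 honest essays:   {expected_false_flags(50, rate):.1f}")
    print(f"Flags per 1,000 honest essays: {expected_false_flags(1000, rate):.1f}")
    print(f"Chance over 10 assignments:    {chance_of_at_least_one_flag(10, rate):.1%}")
```

Under these assumptions, a school screening 1,000 honest essays would expect about 20 false flags, and a student whose 10 assignments are each checked would face roughly an 18% chance of at least one false flag.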

Such mistakes can cause stress and harm reputations.

These errors happen because tools like GPT detectors rely on patterns and predictions. They sometimes flag well-written human work as AI-generated if it matches certain style markers.

Vanderbilt University even disabled Turnitin's AI detection tool over these issues, questioning its reliability in high-stakes situations such as exams or coursework reviews.

Steps to Address AI Detection Claims

Stay cool-headed and handle the issue with care. Use clear proof to back up your point, showing that the work is genuine.

Stay calm and approach the situation professionally

Take a deep breath. False AI cheating claims can feel jarring, but reacting emotionally may hurt your case. A calm approach shows professionalism and builds trust with the dean or other stakeholders involved in academic integrity cases.

Speak thoughtfully when addressing accusations of academic dishonesty. Avoid blame games or heated arguments that escalate tensions further. Instead, focus on finding facts and presenting them clearly.

As one educator put it:

Calm waters reveal clear reflections.

Review the institution’s academic integrity policy

Check the academic integrity policy carefully. Policies like the University of Toronto's Code of Behaviour on Academic Matters explain what counts as cheating or plagiarism. These rules guide how schools handle issues like AI-generated content and false accusations of misuse.

Focus on definitions for terms such as fabrication or falsification. Look at how the school handles claims of academic dishonesty. Some policies also spell out how evidence is gathered and what rights students have during a dispute.

This can help you prepare strong arguments if an AI detector flags work unfairly.

Gather evidence to prove originality

Policies guide the process, but proof is key. Start by collecting drafts and outlines of the student’s work. Notes made during research or brainstorming help too. These show progress and effort over time, not something AI tools can replicate easily.

Previous assignments or essays might also support claims of consistency in style.

Collect timestamps and version history from digital files; these provide a clear timeline. A Turnitin similarity report that finds no plagiarism can also support originality. Keep in mind that false positives in AI detection are common, even with detectors built to catch output from advanced models like GPT-4.
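If your drafts are saved as separate files, a short script can list them in the order they were last modified. This is a minimal Python sketch; the function name and folder path are hypothetical. Modification times can change when files are copied, synced, or re-saved, so treat the result as supporting evidence and prefer the built-in version history of editors like Google Docs or Microsoft Word where it exists.

```python
import sys
from datetime import datetime
from pathlib import Path


def draft_timeline(folder: str, pattern: str = "*") -> list[tuple[str, datetime]]:
    """Return (file name, last-modified time) pairs, oldest first."""
    entries = []
    for path in Path(folder).glob(pattern):
        if path.is_file():
            # st_mtime is the last-modified time in seconds since the epoch
            modified = datetime.fromtimestamp(path.stat().st_mtime)
            entries.append((path.name, modified))
    return sorted(entries, key=lambda item: item[1])


if __name__ == "__main__":
    # Hypothetical usage: python draft_timeline.py path/to/drafts
    folder = sys.argv[1] if len(sys.argv) > 1 else "."
    for name, modified in draft_timeline(folder):
        print(f"{modified:%Y-%m-%d %H:%M}  {name}")
```

The printed list gives the dean a quick, readable timeline to place alongside the drafts themselves.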

Some systems misread phrases or patterns as “AI-generated content” without real cause. Trust hard evidence to counter weak accusations confidently!

Communicating with the Dean

Speak calmly, and show you are open to a fair discussion. Share clear proof of your work and point out possible flaws in the detection software.

Present your evidence clearly

Show drafts, outlines, or research notes used in creating the work. Refer to personal experiences, such as specific class discussions or group projects, that an AI tool would have no way of knowing about.

Bring typed and handwritten edits to demonstrate your process.

Point out errors in AI detection tools by showing false positives. Use examples of trustworthy sources or classic literary quotes that detectors have misidentified as AI-generated text. Keep all evidence organized for easy review during the meeting with the dean.

Highlight potential errors in AI detection tools

AI detection tools often flag false positives. Turnitin, for example, has faced criticism for labeling original work as AI-generated. This can happen due to vague patterns or similarities in sentence structure.

Some tools, like GPTZero and AI Checker for Essays, struggle with creative or very simple writing styles. They may confuse human-written text with machine output because of shared features like short sentences or common phrases.

Students using open educational resources might also be flagged unfairly if their writing closely mirrors publicly available text.

Tools and Resources to Support Your Case

Some AI detection tools may flag genuine work as artificial, causing confusion. Use proof and trusted systems to back your point.

Examples of AI detection tools with false positives

AI detection tools sometimes accuse innocent students of academic dishonesty. False positives can damage trust and hurt reputations, so it’s crucial to know which tools may misfire.

- Turnitin: Turnitin admits a 2% error rate, meaning about 1 in 50 students might face incorrect accusations. This happens because its algorithms can flag complex writing or paraphrased ideas as AI-generated content.

- Originality AI: Originality AI markets itself as 99% accurate but struggles with nuanced writing styles. For instance, creative language or unusual sentence structures might trigger a false positive in its system.

- GPTZero: GPTZero claims to spot AI-generated text, but common human habits like repetition or overly polished grammar can fool it. Students with advanced writing skills are sometimes flagged unfairly.

- Content at Scale Detector: This detector flags writing that "sounds too perfect." Writers aiming for clean, concise work can be accused unintentionally because of the tool's harsh standards.

- Writer.com's detector: Writer.com's tool has trouble distinguishing human from bot-like phrasing in professional or academic tones. This is a problem for well-educated students who write formally.

- Hugging Face Detector: Hugging Face offers an open-source detector, but it lacks fine-tuning for specific contexts like essays or research papers. It might flag technical terms or simple patterns as AI output.

Human oversight remains vital, since none of these tools can reliably tell human writing from AI output on its own.

Alternative methods to verify originality

Sometimes AI detection tools are wrong. Use other ways to check if work is original.

- Compare the document with its version history. This shows changes made over time, proving a student’s progress on the assignment.

- Ask students to explain their work in person or through a quick video call. Honest answers often prove understanding better than any tool.

- Look for unique personal details or insights within the writing. These are difficult for AI to mimic and may confirm originality.

- Break big assignments into smaller parts with clear deadlines. This encourages steady work instead of last-minute reliance on chatbots.

- Use plagiarism-checking software like Turnitin or Grammarly alongside AI detection tools for cross-verification.

- Create short quizzes based on the assignment’s content to gauge if students actually know what they submitted.

- Assign oral presentations tied to written submissions, ensuring students can expand on their ideas verbally.

- Watch for sudden changes in tone, style, or vocabulary that seem out of character compared to prior submissions from the same student.

- Encourage peer reviews before submission deadlines, as group feedback helps spot inconsistencies others might miss.

- Focus on teaching proper research and writing techniques early on so students feel less tempted to plagiarize or misuse technology later.
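For the first check above, comparing versions of a document, Python's standard `difflib` module can show exactly what changed between two saved drafts. This is a minimal sketch; the function name and sample text are illustrative, and it assumes both drafts are available as plain text (for example, exported from the editor's version history).

```python
import difflib


def draft_changes(old_draft: str, new_draft: str,
                  old_name: str = "draft1", new_name: str = "draft2") -> str:
    """Return a unified diff of two drafts; empty string if they are identical."""
    diff = difflib.unified_diff(
        old_draft.splitlines(),
        new_draft.splitlines(),
        fromfile=old_name,
        tofile=new_name,
        lineterm="",  # lines were split without newlines, so add none here
    )
    return "\n".join(diff)


if __name__ == "__main__":
    first = "AI tools flag text.\nThey can be wrong.\n"
    second = "AI tools flag text.\nThey are sometimes wrong.\nKeep your drafts.\n"
    print(draft_changes(first, second))
```

Lines starting with `-` were removed and lines starting with `+` were added, so a series of diffs across drafts shows the gradual revision that a single pasted AI output would lack.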

Legal Defenses Against False AI Usage Accusations

Consult an attorney if serious accusations arise. Legal counsel can help protect rights under academic policy and student privacy laws, like FERPA. Institutions must follow their own rules on academic misconduct fairly.

Request proof of the alleged AI usage. Ask for the exact outputs from detection tools or the flagged sections of work. Highlight any history of false positives in these tools, since many cases rest almost entirely on the tool's output.

Challenge vague claims with clear evidence supporting originality. For educators or administrators facing disputes, this process protects both academic integrity and fairness whenever educational technology is involved.

Preventative Measures for Administrators

Teach both students and staff the limits of AI tools, so false accusations don’t snowball into bigger issues.

Educate staff and students on AI detection limitations

AI detection tools often flag false positives. For example, Vanderbilt University stopped using Turnitin’s AI detection tool due to reliability issues. These tools may mislabel original work as AI-generated content, causing unfair claims of academic dishonesty.

Students and teachers should know these technologies are not foolproof. Tools like ChatGPT detectors rely on patterns in writing, which can sometimes match natural human writing styles.

Discussing these flaws openly can prevent unnecessary stress and promote fair classroom policies.

Encourage transparent communication in similar cases

Open dialogue builds trust in handling academic misconduct claims. Faculty, staff, and students should feel safe sharing concerns without fear of judgment or harsh consequences. Schools can establish clear reporting systems and train employees to address issues fairly.

Using anonymous examples from past AI detection errors can educate your community about false positives. Highlighting these cases shows why the evidence should be weighed carefully before anyone is accused of submitting AI-generated content.

Clear policies encourage honesty and prevent confusion during similar disputes.

Conclusion

Facing AI detection claims can feel frustrating, but the right steps make all the difference. Gather proof, stay professional, and use clear communication with the dean. Highlight flaws in AI tools and offer facts that support your case.

Schools must adjust to these challenges while protecting fairness for everyone. With patience and preparation, you can handle this effectively.
