Are you worried about how AI tools might impact your grades? Many students fear being unfairly flagged by AI detectors for using generative AI, even when they didn’t. This post explains the accuracy issues and ethical concerns tied to these tools, plus their risks for academic records.

Keep reading to learn why this matters and what can be done.

Key Takeaways

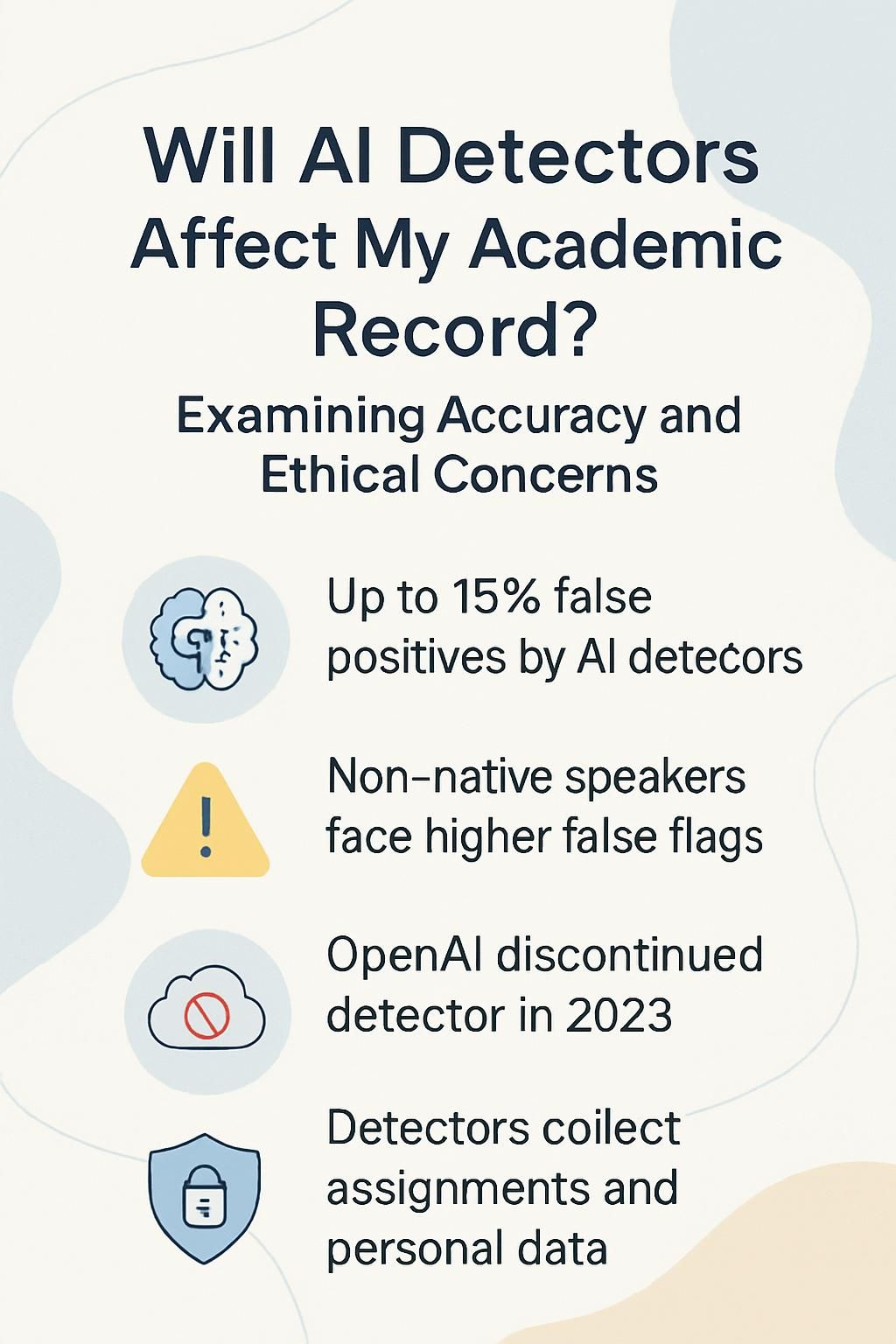

- AI detectors often make mistakes. They can wrongly flag up to 15% of human-written work as AI-generated (Perkins, 2024). This risks unfair academic penalties for honest students.

- False positives harm non-native English speakers more. Unique writing styles or simpler language use may trigger AI flags unfairly, creating bias and inequality.

- Relying solely on AI detection is risky. OpenAI shut down its detector in 2023 due to poor accuracy. Professors should not give zeros based only on these tools without more evidence.

- Detectors raise privacy concerns because they collect student data such as assignments and personal information. A lack of clear rules erodes students’ trust in how schools use that data.

- Alternatives include creative assignment designs that inspire honesty, oral presentations, multiple-choice tasks, or open discussions instead of relying on flawed AI tools alone.

How Do AI Detectors Work?

AI detection tools spot patterns in writing. They check for signs of AI-generated content, like repetitive sentence structures and overuse of linking words. Generative AI often produces text with predictable sentence lengths and little variation in phrasing.

Detectors flag these features based on the likelihood they came from artificial intelligence.

Unlike plagiarism checkers, these tools do not compare direct matches to databases. Instead, they provide a percentage score showing how likely the text was created by an AI model.

For example, OpenAI’s detector, which was shut down in 2023, analyzed stylistic clues instead of matching exact phrases. Because these checks are probabilistic, their results leave room for both mistakes and misinterpretation.
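
The signals described in this section can be sketched in a few lines of Python. This is a toy illustration only, not how any real detector works: the linking-word list, the two features, and the equal weights are all assumptions made up for this example.

```python
import re
from statistics import mean, pstdev

# Hypothetical linking-word list; real detectors do not publish theirs.
LINKING_WORDS = {"however", "moreover", "furthermore",
                 "additionally", "therefore", "consequently"}


def style_features(text: str) -> dict:
    """Compute two toy stylistic signals for a passage of text."""
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    words = re.findall(r"[a-z']+", text.lower())
    # Low variation in sentence length reads as "predictable".
    variation = pstdev(lengths) / mean(lengths) if lengths and mean(lengths) > 0 else 0.0
    linking_rate = sum(w in LINKING_WORDS for w in words) / len(words) if words else 0.0
    return {"length_variation": variation, "linking_rate": linking_rate}


def toy_ai_score(text: str) -> float:
    """Map the features to a 0-100 score. The weights are arbitrary assumptions."""
    f = style_features(text)
    uniformity = max(0.0, 1.0 - f["length_variation"])  # 1.0 = identical sentence lengths
    linking = min(1.0, f["linking_rate"] * 10)          # saturates at 10% linking words
    return round(100 * (0.5 * uniformity + 0.5 * linking), 1)


# Simple, formulaic prose that a person could easily have written.
simple_human_text = ("However, the cat sat down. Moreover, the dog sat down. "
                     "Therefore, the bird sat down.")
varied_text = ("I ran. Yesterday my grandmother taught me how to bake bread "
               "in her tiny kitchen. It was fun.")

print(toy_ai_score(simple_human_text))
print(toy_ai_score(varied_text))
```

In this sketch the simple, formulaic passage gets the maximum score of 100.0 even though a person could have written it, while the more varied passage scores far lower. A score like this measures style, not authorship, which is why plain or formulaic human writing can be flagged.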

Accuracy Issues in AI Detection

AI detectors are a double-edged sword: they can catch real AI use, but they can also get it wrong. Sometimes they flag something as AI-written when it isn’t, leaving students in messy situations.

False positives and negatives

False positives wrongly accuse students of using AI tools they never touched. Perkins (2024) reports that current AI detection tools have a 15% false accusation rate. In one study of 805 samples, detectors mislabeled human-written work as AI-generated.

This poses risks for students who write original content but face action for academic misconduct.

False negatives happen when AI-generated text passes as authentic writing. Large language models like ChatGPT grow smarter, making detection tougher over time. Tools rely on probabilities and cannot guarantee accuracy.

English language learners or non-native speakers are also at risk since their writing style may trigger flags mistakenly tied to AI use.

AI detectors don’t read minds; they calculate odds.
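
To make "calculating odds" concrete, here is a minimal sketch using Bayes' rule. Only the 15% false positive rate comes from this post (Perkins, 2024). The share of essays that are actually AI-written (20%) and the detector's true positive rate (80%) are assumed values chosen purely for illustration.

```python
def prob_ai_given_flag(prevalence: float,
                       true_positive_rate: float,
                       false_positive_rate: float) -> float:
    """Bayes' rule: probability an essay is AI-written, given that it was flagged."""
    flagged_ai = prevalence * true_positive_rate
    flagged_human = (1 - prevalence) * false_positive_rate
    return flagged_ai / (flagged_ai + flagged_human)


# false_positive_rate: the 15% figure cited above.
# prevalence and true_positive_rate: hypothetical values, not measured data.
p = prob_ai_given_flag(prevalence=0.20,
                       true_positive_rate=0.80,
                       false_positive_rate=0.15)
print(f"{p:.0%}")  # prints "57%"
```

Under these assumptions, a flag means only about a 57% chance that the essay was AI-written, so roughly four in ten flagged essays would be human work. Different assumptions give different numbers, but the pattern holds: a flag on its own is weak evidence.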

Evolving nature of AI models

AI models like GPT-4 keep getting smarter. They mimic human writing better than before, making it harder for AI detectors to spot them. Some tools struggle, especially with advanced models.

Detection weakens as creators use tricks to confuse the system. For example, Google’s Bard used to be easier to detect but became tougher after tweaks were applied. This constant change makes reliance on AI detection risky for academic integrity.

Ethical Concerns Surrounding AI Detectors

AI detectors often collect student data, raising questions about privacy. Plus, biases in these tools can unfairly target certain groups or writing styles.

Privacy and data usage concerns

AI detection tools often collect student data. This can include writing samples, personal information, or even assignment details. Sometimes, these tools store this data to improve algorithms or train AI models like large language models (LLMs).

Without clear rules, your work might be shared without your consent.

Non-native English speakers and English language learners face more risks. Their unique writing style could make them targets for unfair scrutiny. Students might not know how their information is used later.

This lack of transparency creates trust issues between students and schools over academic honesty policies.

Equity and bias in AI detection

Some AI detection tools unfairly favor native English speakers. Non-native speakers, including many English language learners, might get flagged more often. This bias can hurt their grades even if they follow academic honesty rules.

Access to advanced AI tools also skews results. A student with better tech may escape detection while others using simpler resources get penalized. For example, Bob’s essay is 100% AI-generated but isn’t flagged because he used a more sophisticated tool, while Alice’s essay, at 85% AI-generated, is flagged.

These gaps deepen inequities instead of promoting fairness in education.

Implications for Academic Records

AI detectors can make mistakes, and those errors might leave a mark on your records. A flagged paper could lead to tough conversations with professors or unfair punishments.

Risk of unjustified academic penalties

False positives in AI detection tools can harm students’ academic records. Research on 805 samples showed significant errors, with human-written work wrongly marked as AI-generated. Honest students may face accusations of academic misconduct they didn’t commit.

These mistakes impact grades and damage trust between students and educators.

Non-native English speakers often get flagged unfairly. AI detectors sometimes misinterpret simpler sentence structures or unique language patterns as machine-generated content. This bias creates unequal treatment that harms student well-being and undermines academic integrity as a whole.

Long-term consequences for students

Mistrust in the academic system can leave lasting scars. A false accusation tied to AI detection tools might end up on a student’s record, marking them unfairly as dishonest. This stigma could hurt chances at college admissions or job applications later.

Students may lose faith in educators and institutions if flagged without proper evidence. Non-native English speakers often face bias from these tools, making their academic journey even harder.

These issues erode trust and motivation, and the damage can last long after graduation day.

Can Professors Give Zero Based Solely on AI Flags?

Professors should not rely solely on AI detection tools to assign a zero. These tools often make mistakes, flagging work as AI-generated when it is not. OpenAI even shut down its own detector in 2023 because of poor accuracy.

Mistakes like false positives could unfairly impact non-native English speakers or students who express thoughts simply.

Giving a zero without other evidence raises fairness issues. Academic integrity decisions must involve critical thinking and careful review of the student’s work and writing style.

Relying only on technology may create distrust among students and lead to appeals from those wrongly accused. This highlights the need for fairer methods in assessing academic honesty disputes.
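
A quick calculation shows why a flag alone is thin grounds for a zero. The sketch below takes the 15% false positive rate cited earlier and applies it to a hypothetical class of 30 students who all wrote their own work. It assumes each essay is flagged independently, which is a simplification.

```python
def expected_false_flags(honest_students: int, false_positive_rate: float) -> float:
    """Average number of honest students wrongly flagged."""
    return honest_students * false_positive_rate


def prob_at_least_one_false_flag(honest_students: int, false_positive_rate: float) -> float:
    """Chance that at least one honest student is flagged, assuming independent flags."""
    return 1 - (1 - false_positive_rate) ** honest_students


class_size = 30           # hypothetical class in which nobody used AI
false_positive_rate = 0.15  # figure cited in this post (Perkins, 2024)

print(expected_false_flags(class_size, false_positive_rate))
print(f"{prob_at_least_one_false_flag(class_size, false_positive_rate):.1%}")
```

With these inputs, the detector would wrongly flag four or five students on average, and the chance that at least one honest student is flagged is above 99%. A policy of automatic zeros would therefore punish honest students in almost every class of this size.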

Alternatives to AI Detectors

Creative teaching methods, open discussions, and well-designed assignments can inspire trust and honesty in students better than any AI tool.

Encouraging academic integrity through assignment design

Tasks that spark curiosity help students stay honest. Focus on topics tied to real-life problems or personal reflection. For example, ask students to connect lessons to their daily lives or future goals.

This promotes intrinsic motivation and reduces the temptation to misuse AI.

Using a mix of assessments works well too. Pair written assignments with oral presentations or group projects. English language learners may thrive in formats like multiple-choice questions over essays, while others might prefer open-ended tasks for creativity.

This approach fosters equity and supports all types of learners.

Transparent and inclusive teaching practices

Teachers can encourage students to disclose AI tool usage through clear guidelines. Asking for process statements helps create trust and openness in the classroom. These simple steps make it easier to promote academic honesty without relying solely on AI detection tools.

Inclusive practices also consider non-native English speakers and their unique challenges. Teachers can use oral presentations or multiple-choice tasks, which are harder to complete with AI-generated text.

This approach supports fairness while boosting student engagement and motivation.

Conclusion

AI detectors are not foolproof, and mistakes happen. False accusations can hurt students’ academic records unfairly. This raises big concerns about trust, ethics, and fairness in schools.

Instead of relying on flawed tools, educators should focus on building honest learning environments. Open conversations and smarter assignments can do more good than these imperfect AI tools ever will.